What is ETL Testing

Let's get right into it!

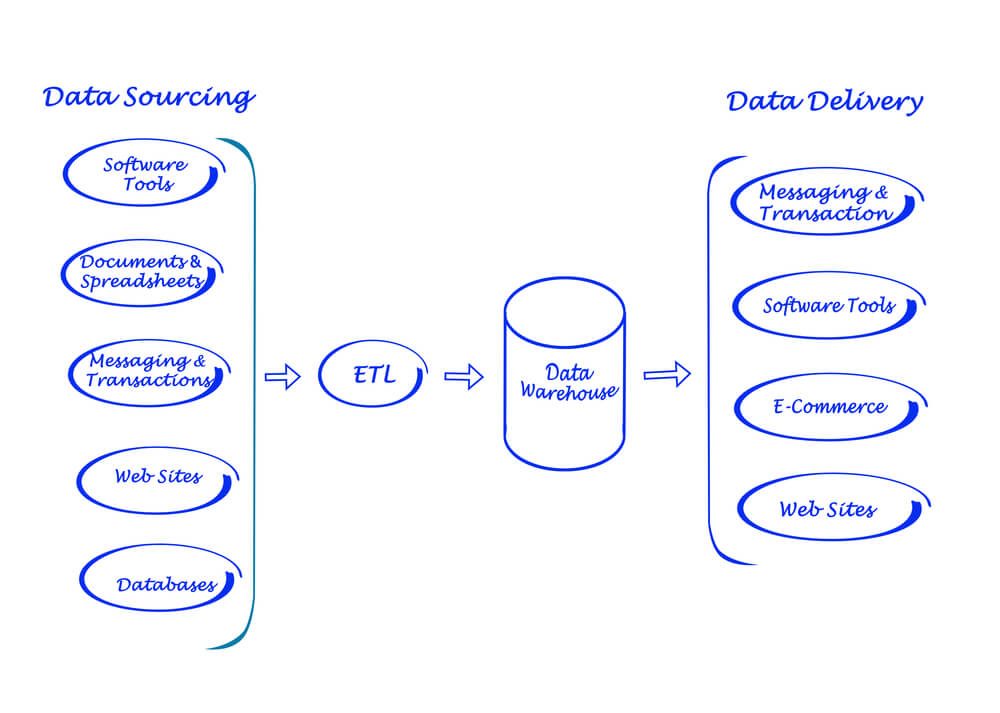

An ETL test is executed to ensure that data loaded into a data warehouse system is accurate after a data transformation process is complete.

FYI: Most of the information in this post is taken from an online event I ran for QuerySurge all about ETL, so you know you are getting real advice from real ETL practitioners.

What is ETL

But before we get any further, you may want to know what ETL is and what it stands for.

ETL stands for Extract, Transform, and Load.

During your research, you might also hear about Data Warehouse Testing.

I always find it helpful to look at job descriptions to find out what skills and tools companies are looking for when hiring an ETL tester.

This gives a good indication of what is in demand and what you should focus on learning.

Here is a typical description for data testers:

ETL testers are responsible for ensuring the accuracy and reliability of an organization's data movement and transformation processes. This typically involves writing and executing test cases, analyzing test results, and working closely with developers and other stakeholders to identify and resolve issues.

Typical responsibilities of an ETL tester include:

- Designing and implementing test strategies and plans for ETL processes

- Writing and executing test cases for ETL processes using tools such as SQL, JUnit, and Selenium

- Validating the accuracy and completeness of data extraction, transformation, and loading operations

- Identifying and reporting defects and issues in ETL processes and working with developers to resolve them

- Collaborating with project managers, business analysts, and other stakeholders to understand requirements and ensure that ETL processes meet business needs

- Participating in reviews and inspections of ETL processes and providing feedback on quality and compliance

- Maintaining and updating test documentation, such as test plans, test cases, and bug reports

- Supporting the continuous improvement of ETL processes, including adopting new technologies and best practices

What are the three stages defined in the ETL pipeline?

The ETL pipeline, a dynamic process in data management, is composed of three distinct stages: Extraction, Transformation, and Loading. In the Extraction stage, data is harvested from a variety of sources. The Transformation stage is where the magic happens, with the data being cleansed, restructured, and molded to fit the desired format. Finally, in the Loading stage, the transformed data is loaded into a target database or data warehouse, ready to be harnessed for analysis, revenue generation, or data mining.

So, for example:

- Various source systems are coming together, maybe into a big data lake

- Data moving from the data lake to a data warehouse and perhaps a data mart

- Data from the data warehouse/mart ending up in a business intelligence report

How does ETL test automation differ from automated testing in development scenarios?

ETL test automation significantly differs from automated testing in development scenarios. In automated testing for web or mobile applications, the focus is often on validating the functionality and input data based on predetermined criteria.

However, ETL test automation involves verifying data quality and ensuring the data's accuracy and relevancy. Data testing in ETL scenarios is complex and requires a different approach than traditional automated testing.

Validating data integrity, identifying errors, and handling unpredictable data patterns are crucial aspects of ETL testing automation. Unlike standard automated testing in development, ETL testing deals with various data sources, transformations, and quality checks, making it more intricate.

Furthermore, the nature of data processing in ETL testing adds another layer of complexity. Unlike typical web or mobile applications with relatively controlled user input, ETL processes deal with diverse data sets that may not follow a straightforward validation pattern.

This complexity in data handling necessitates specialized tools and approaches for ETL test automation to ensure comprehensive testing coverage.

Overall, ETL test automation requires a specific focus on data quality, error handling, and transformation logic, setting it apart from the automated testing practices commonly used in development scenarios. The evolving landscape of ETL testing tools and methodologies indicates the recognition of the unique challenges posed by data-centric testing and the growing importance of ETL test automation in ensuring overall system reliability and performance.

What is Data Warehouse Testing

Testing a data warehouse ensures that it is accurate, reliable, and complies with the company’s data framework. The primary purpose of data warehouse testing is to ensure that the data integrated into the warehouse is complete and correct enough for the company to rely on.

How Do You Test Data Transformations?

When companies move data from many different heterogeneous sources to their data warehouses, whether on-prem or in the cloud, they typically use ETL tools to process the information and transform it into the correct format.

Testing is critical to ensure the data migration works according to the requirements and that the data is in the right place at the right time. As they say, data is the new gold.

Think of data validation testing as functional testing for data, combined with some performance testing. You're verifying that data from your sources passes through the transformation logic into the target databases or data warehouses and arrives there correctly.

In a nutshell, ETL is a process that extracts data from source systems, transforms the information into a consistent data type based on business rules, then loads the data into a single repository. ETL testing is essentially data validation, verification, and qualification, ensuring that there are no duplicates and that no data is lost.
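To make the "no duplicates, no lost data" idea concrete, here is a minimal sketch of two common checks: a row-count comparison and a duplicate-key search. It uses Python's built-in `sqlite3` with an in-memory database. The table names (`src_customers`, `tgt_customers`), the `customer_id` key, and both helper functions are hypothetical and only for illustration.

```python
import sqlite3


def count_rows(conn, table):
    """Return the number of rows in a table.

    Table names cannot be bound as SQL parameters, so only pass trusted names.
    """
    return conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]


def find_duplicate_keys(conn, table, key):
    """Return key values that appear more than once in the table."""
    rows = conn.execute(
        f"SELECT {key} FROM {table} GROUP BY {key} "
        f"HAVING COUNT(*) > 1 ORDER BY {key}"
    ).fetchall()
    return [row[0] for row in rows]


# Hypothetical source and target tables, created in memory for the example.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE src_customers (customer_id INTEGER, email TEXT);
    CREATE TABLE tgt_customers (customer_id INTEGER, email TEXT);
    INSERT INTO src_customers VALUES
        (1, 'a@example.com'), (2, 'b@example.com'), (3, 'c@example.com');
    -- Customer 2 was loaded twice and customer 3 was lost.
    INSERT INTO tgt_customers VALUES
        (1, 'a@example.com'), (2, 'b@example.com'), (2, 'b@example.com');
""")

source_count = count_rows(conn, "src_customers")
target_count = count_rows(conn, "tgt_customers")
duplicates = find_duplicate_keys(conn, "tgt_customers", "customer_id")

print("counts match:", source_count == target_count)
print("duplicate keys in target:", duplicates)
```

Notice that the row counts match in this example even though one record was lost, because a duplicate took its place. That is why a count check on its own is rarely enough and is usually paired with duplicate and row-level checks.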

ETL Process

The ETL process is commonly used in data integration and data warehousing to move and manipulate data from multiple sources into a single, central repository. The process aims to make the data more accessible, understandable, and useful for downstream analysis and reporting.

As Bill Hayduk, the founder of RTTS, explained in our TestGuild Automation Podcast interview, the three main steps in the ETL process are:

| Step | Description |
|---|---|
| 1. Extract | Data is extracted from various sources, such as databases, flat files, or other systems. |
| 2. Transform | The extracted data is cleaned, standardized, and transformed into a suitable format for the target system. |
| 3. Load | The transformed data is loaded into the target system, such as a data warehouse or analytics platform. |
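The three steps can be sketched in a few lines of Python using only the standard library. This is a toy illustration, not how any particular ETL tool works: the CSV content, the `orders` table, and the rule of skipping rows with a missing amount are all assumptions made up for the example.

```python
import csv
import io
import sqlite3

# Hypothetical raw source data, kept in memory so the example is self-contained.
RAW_CSV = """order_id,amount,currency
1, 10.50 ,usd
2,7,USD
3,,usd
"""


def extract(text):
    """Extract: read rows from a CSV source into a list of dicts."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: trim whitespace, standardize currency codes, skip empty amounts."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # assumed business rule: rows without an amount are skipped
        cleaned.append(
            (int(row["order_id"]), float(amount), row["currency"].strip().upper())
        )
    return cleaned


def load(conn, rows):
    """Load: write the transformed rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, transform(extract(RAW_CSV)))
loaded = conn.execute(
    "SELECT order_id, amount, currency FROM orders ORDER BY order_id"
).fetchall()
print(loaded)
```

Keeping each stage in its own function means a tester can check the transformation rules on their own, without needing a live source or target system.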

Did you know:

Poor data quality costs companies an estimated $14.2 million annually, according to Gartner.

How does ETL Testing Work?

There's often a position called ETL Developer or Business Analyst. People in this role work from a mapping document and mapping requirements, such as functional data requirements. They take the data sources and, based on those requirements, check whether the data matches what is expected.

For instance, is there a first name and last name in the same field? If so, split the value into two fields based on the mapping requirements. Once the data is transformed into the expected output, they’ll move it to the target database or data warehouse.
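Here is a rough sketch of how the name-splitting rule could be tested. It assumes a mapping rule of "split on the first space", which is my simplification, since real mapping documents usually handle middle names, suffixes, and so on. The function names and sample rows are hypothetical.

```python
def split_full_name(full_name):
    """Split 'First Last' into (first_name, last_name) on the first run of whitespace."""
    parts = full_name.strip().split(None, 1)
    first = parts[0] if parts else ""
    last = parts[1] if len(parts) > 1 else ""
    return first, last


def find_mapping_violations(source_rows, target_rows):
    """Return the ids whose target row does not match the expected split."""
    target_by_id = {row["id"]: row for row in target_rows}
    violations = []
    for src in source_rows:
        expected = split_full_name(src["full_name"])
        tgt = target_by_id.get(src["id"])
        actual = (tgt["first_name"], tgt["last_name"]) if tgt else None
        if actual != expected:
            violations.append(src["id"])
    return violations


# Hypothetical source rows and the rows that ended up in the target.
source_rows = [
    {"id": 1, "full_name": "Ada Lovelace"},
    {"id": 2, "full_name": "Grace Hopper"},
]
target_rows = [
    {"id": 1, "first_name": "Ada", "last_name": "Lovelace"},
    {"id": 2, "first_name": "Grace Hopper", "last_name": ""},  # name was not split
]

violations = find_mapping_violations(source_rows, target_rows)
print("rows violating the mapping rule:", violations)
```

The test re-derives the expected value from the source using the mapping rule and compares it with what actually landed in the target, which is the core pattern behind most transformation tests.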

To recap, testing can be broken down into five main stages:

- Determine your requirements and the data sources you need to work with

- Import the data

- Apply the coded business logic

- Process and populate data to the correct target

- Generate reports

According to Gartner, the average company has about 100 data sources in one data warehouse. Many larger enterprises have not just one but 30, 40, or even 50 data warehouses. That means that a lot of data is moving in and growing exponentially.

Why do we use ETL and what are its benefits in data processing?

Here are a few of the benefits of this approach:

- Allows validating that data moves as expected based on mapping requirements between source and target systems

- Enables testing data quality and identifying issues as data flows through the pipeline from source systems to end analytics reports

- Facilitates automating data validation tests and integrating them into DevOps processes and tools for continuous testing
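As an illustration of validating source-to-target movement against a mapping rule, the sketch below compares an "expected" query on the source with an "actual" query on the target using SQL `EXCEPT` in both directions. The tables (`staging_orders`, `dw_orders`), the rule (status is trimmed and upper-cased), and the helper name are assumptions for the example, run here against in-memory SQLite.

```python
import sqlite3


def rows_only_in_first(conn, first_sql, second_sql):
    """Return rows produced by first_sql that second_sql does not produce."""
    return conn.execute(f"{first_sql} EXCEPT {second_sql} ORDER BY 1").fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE staging_orders (order_id INTEGER, status TEXT);
    CREATE TABLE dw_orders (order_id INTEGER, status TEXT);
    INSERT INTO staging_orders VALUES
        (1, ' shipped '), (2, 'pending'), (3, 'cancelled');
    -- Order 2 was not upper-cased, order 3 is missing, order 4 is unexpected.
    INSERT INTO dw_orders VALUES
        (1, 'SHIPPED'), (2, 'pending'), (4, 'SHIPPED');
""")

# Assumed mapping rule: target status = UPPER(TRIM(source status)).
expected_sql = "SELECT order_id, UPPER(TRIM(status)) FROM staging_orders"
actual_sql = "SELECT order_id, status FROM dw_orders"

missing_in_target = rows_only_in_first(conn, expected_sql, actual_sql)
unexpected_in_target = rows_only_in_first(conn, actual_sql, expected_sql)

print("expected but not found in target:", missing_in_target)
print("found in target but not expected:", unexpected_in_target)
```

Running the comparison in both directions matters: one direction catches lost or wrongly transformed rows, and the other catches rows that should not be in the target at all. A check like this returns plain lists, so it can be wrapped in whatever test runner your pipeline already uses.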

What are the challenges of ETL?

“75% of businesses are wasting 14% of revenue due to poor data quality,” according to Experian Data Quality.

Identifying challenges early in the ETL process is vital to prevent bottlenecks and costly delays.

Requirements also change frequently in most projects these days, which can force ETL testers to rework the logic of their scripts again and again. In that case, it is best to create data-mapping documentation that is shared within the team, so the business requirements are precise from the start.

Here are a few other challenges to watch out for:

- Data that is lost or corrupted during migration

- Limited availability of source data

- Underestimating requirements

- Duplicate or incomplete data

- A large volume of historical data that makes ETL testing in a target system difficult

- Unstable testing environment

- Outdated ETL tools in use

This brings us back to ETL testing and why it matters:

- Without ETL automation testing, there is no way to know that the process is built to the specifications and as per requirements.

- Without ETL testing, the code cannot be released or deployed in production.

- ETL testing enables root-cause analysis to identify issues due to the source data or the ETL process.

- It is very expensive and challenging to fix data issues in production. ETL testing ensures that the data issues are caught early in the development lifecycle.

In DataOps or DevOps for data, you get quality at speed if you remove human intervention and try not to let testing become a bottleneck.

So you add automated testing, and let code make the decision on whether to promote a change, just as in software delivery pipelines; the same idea applies in the data space. This is a growing space due to the rise of big data.
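A promotion decision like this can be sketched as a small "quality gate" that collects the results of automated data checks and blocks promotion if any blocking check failed. Everything here (the `CheckResult` class, the check names, and the `gate_decision` function) is hypothetical; real pipelines would typically wire the outcome to the CI tool's pass/fail status.

```python
from dataclasses import dataclass


@dataclass
class CheckResult:
    name: str
    passed: bool
    blocking: bool = True  # non-blocking checks are reported but do not stop promotion


def gate_decision(results):
    """Return ('promote', []) only if every blocking check passed."""
    failed = [r.name for r in results if r.blocking and not r.passed]
    if failed:
        return "block", failed
    return "promote", []


# Hypothetical results from an automated data test run.
results = [
    CheckResult("row_count_matches", True),
    CheckResult("no_duplicate_keys", False),
    CheckResult("optional_column_populated", False, blocking=False),
]

decision, failed_checks = gate_decision(results)
print(decision, failed_checks)
```

In a CI job, a "block" decision would usually translate into a non-zero exit code so the pipeline stops without anyone having to inspect the data by hand.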

ETL for Big Data

Big data is a combination of structured, semi-structured, and unstructured data collected by organizations that can be mined for information and used in machine learning projects, predictive modeling, and other advanced analytics applications.

Systems that process and store big data have become a standard component of data management architectures in organizations, combined with tools that support big data analytics.

Big data is often characterized by the three V's:

- the large volume of data in many environments

- the wide variety of data types frequently stored in big data systems

- the velocity at which much of the data is generated, collected and processed

Companies use big data in their systems to improve operations, provide better customer service, create personalized marketing campaigns and take other actions that can ultimately increase revenue and profits. Businesses that use it effectively hold a potential competitive advantage over those that don't because they can make faster and more informed business decisions.

For example, big data provides:

- Valuable insights into customers that companies can use to refine their marketing, advertising, and promotions to increase customer engagement and conversion rates

- Both historical and real-time data can be analyzed to assess the evolving preferences of consumers or corporate buyers, enabling businesses to become more responsive to customer wants and needs.

- In the energy industry, big data helps oil and gas companies identify potential drilling locations and monitor pipeline operations; likewise, utilities use it to track electrical grids.

- Financial services firms use big data systems for risk management and real-time market data analysis.

- Manufacturers and transportation companies rely on big data to manage their supply chains and optimize delivery routes.

- Government uses include emergency response, crime prevention, and smart city initiatives.

What is Business Intelligence (BI) software?

“19.2% of big data app developers say the quality of data is the biggest problem they consistently face,” according to Evans Data Corporation.

Business intelligence (BI) software applications help collect and process large amounts of data from internal and external systems and provide insights such as:

- Reports

- Dashboards

- Data visualizations

- Ad hoc analysis

- Online analytical processing

The main task of a BI application is to query and report. All of these outputs help improve decision-making and operational efficiency and help identify new opportunities and market trends.

ETL Database Testing Tools

One of the most common solutions for testing all the data scenarios we've covered is QuerySurge.

QuerySurge is the smart Data Testing solution that automates the data validation and ETL testing of Big Data, Data Warehouses, and Business Intelligence Reports. QuerySurge ensures that the data extracted from data sources remains intact in the target data store by quickly analyzing and pinpointing any differences.

Types of ETL Testing

QuerySurge can help you with many quality testing activities like:

- Continuously detecting data issues in the delivery pipeline

- Dramatically increasing data validation coverage

- Leveraging analytics to optimize your critical data

- Improving your data quality at speed

- Providing a huge ROI

- Developing transformation rules

- Warehouse testing

- ETL Integration Testing

- Data Regression Testing

Examples of ETL Testing Tools

| Tool | Description |
|---|---|
| Talend | An open-source ETL tool that offers a wide range of connectors and data transformation capabilities. |
| Informatica PowerCenter | A commercial ETL tool that offers a graphical user interface and support for multiple data formats and platforms. |
| IBM InfoSphere DataStage | A commercial ETL tool that offers parallel processing capabilities and support for big data environments. |
| SAP Data Services | A commercial ETL tool that offers support for data quality, governance, and integration with other SAP products. |
| Pentaho Data Integration | An open-source ETL tool that offers a graphical user interface and support for big data environments. |
| QuerySurge | A robust and scalable data testing solution that helps organizations ensure the accuracy and reliability of their data integration and migration processes. |

These are just a few examples of popular tools for ETL testing. Many other options are available; the best choice will depend on your specific needs and requirements.

I recently interviewed Bill Hayduk, the founder of the software and services firm RTTS, the maker of QuerySurge.

He explained that QuerySurge is an enterprise application that initially launched at Oracle OpenWorld in 2012 and has a user base of almost 200 corporate customers in 40 different countries.

QuerySurge was developed to automate ETL testing, big data testing (including NoSQL and Hadoop data stores), BI report testing, and more, including:

- Data migrations

- Full DevOps compatibility

- ERP and enterprise applications

It automates data testing, much as Selenium automates functional testing.

QuerySurge is a robust and scalable data testing solution that helps organizations ensure the accuracy and reliability of their data integration and migration processes. With QuerySurge, you can automate data flow testing from a wide range of sources and targets, including databases, files, and cloud platforms.

QuerySurge uses advanced algorithms and machine learning to identify and report potential data issues, such as incorrect or missing values, data truncation, and data type mismatches. It also includes features that simplify and accelerate the testing process, such as customizable test templates, visual comparisons of expected and actual results, and automatic test execution and scheduling.

QuerySurge is designed for teams of all sizes and experience levels and includes a friendly and intuitive user interface that allows you to create, execute, and analyze tests quickly and easily. It is used by leading organizations in various industries, including finance, healthcare, retail, and telecom, to improve the quality and speed of their data-driven initiatives.
