Today Lisa Crispin will wrap up her three-part series on Automation Modeling.

If you haven’t already, check out her two previous posts on:

- Part 1: Modeling Your Test Automation Strategy

- Part 2: Why You Should Explore More Test Automation Models

For the third installment, Lisa will share tips on how to keep up your whole-team engagement in automation.

One more time: take it away, Lisa.

Fighting Automation Resistance

In my final installment in this series, I’ll describe some benefits of diverse skill sets and perspectives as you turn your strategy into action.

You’re likely to run into resistance from some team members or managers, especially anyone who doesn’t experience the pain of manual regression testing first-hand. But you can turn that resistance into potential help.

Choosing the right tools for your context is a big part of a successful whole-team strategy. So is setting short-term goals and using small experiments to learn. Getting even small test suites into your continuous integration pipeline will produce benefits right away!

But first, let’s remember why we even automate.

Remembering Why We Automate

Chapter 4 of Accelerate: Building and Scaling High-Performing Technology Organizations by Dr. Nicole Forsgren, Jez Humble, and Gene Kim explains how test automation practices predict IT performance. Their research includes five years of data from the State of DevOps Surveys.

To sum it up briefly, high-performing teams have reliable automated tests, which run with every commit of a code change.

The team is confident that any test failures mean there is really a defect in the code.

Developers primarily create and maintain acceptance tests, and can easily reproduce and fix problems in their own development environment. This means that developers write more testable code, using practices such as test-driven development (TDD). It also means that developers put more care into the automated tests since they are responsible for maintaining them.

As the book says, “None of this means we should be getting rid of testers”. Testers help create and evolve suites of automated tests alongside developers. They perform essential testing activities such as exploratory, usability and acceptance testing.

This whole-team responsibility for all testing activities, including dependable, maintainable automated test suites, is key to an organization’s ability to deliver value frequently and sustainably to customers.

Getting Off the Hamster Wheel

I hope you’ve already tried a few of the visual models covered in my previous posts on modeling automation. Used well, they lead to more productive conversations among team members about your test automation strategy.

If that’s happening on your team, you’re ready to put your automation plan into action.

Congratulations!

You’ve focused on the right places to start automating. You’re ready to start your automation journey.

Keep that whole-team engagement!

But how?

For starters, follow my three-phase approach for maximum success:

1) If you haven’t done this already, before each release to production, divide your manual regression test scripts up among everyone on the team, including developers, product owners, designers, system administrators, whomever, and share the pain.

2) Set short-term, attainable goals together, and design experiments to achieve them.

3) Find ways to measure progress so that you can adjust your strategy if needed. For example, if you gave yourselves two weeks to select automation tools and you are struggling to make a choice, you might need to step back and spend more time deciding what your requirements for a tool are.

When You Face Automation Testing Resistance

When you try to get team members involved in sharing the manual regression testing pain or starting to automate tests, you might hear someone say things like:

- That’s not my job

- We don’t have time for that, we have to get this feature out

- It’s fine to keep doing manual regression testing

You could try explaining all the benefits of automation. But we humans aren’t always swayed by logical arguments. We tend to act more from our values.

No worries.

Instead, listen to the skeptic. Ask them to explain their thinking. As they keep talking about it, they might find some areas where they don’t feel so confident about their stance. See if you can get them to agree to try a small experiment.

Ask them for help – once we help someone else, we tend to keep treating them as an ally. Show skeptics what’s in it for them.

Keep Your Automation Efforts Visible

Make big visible charts showing your goals and update them to show progress as you go.

As soon as you have tests running in your continuous integration, make sure their results show up on wall monitors and in Slack notifications.

If you're working in a distributed team, use online dashboards.

Building your toolbox

There’s always a temptation to go with the latest and greatest automation tool (“That will look great on my resumé!”), or the most popular one (“Nobody can criticize me for choosing that one!”).

If your budget is small, you might be tempted to limit your search to open source tools. But remember, there is a cost to learning a tool, as well as to maintaining the tests and analyzing test failures.

Instead, start by getting your team together to think about how you want your tests to look.

Remember, automated tests serve as living documentation, always up to date. You should be able to read them and know how your system works. Who will write the tests, and what will they feel comfortable writing? Who needs to be able to understand them? Who will take action when tests fail in continuous integration (CI)?

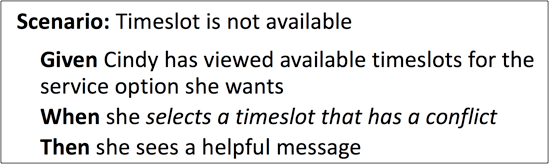

Non-coders may find a domain-specific language such as Gherkin easiest to use for specifying tests. Something along these lines, while not exactly the way we have conversations, is understandable to people in the company:

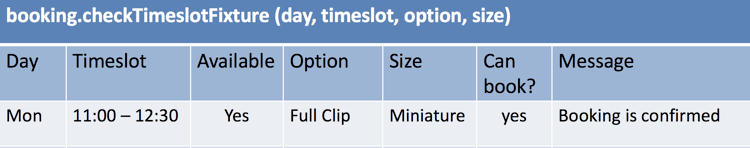

Some businesses use spreadsheets a lot, and find a tabular format easy to follow:

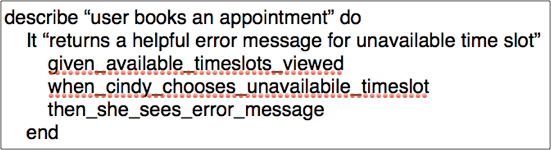
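Tabular tests map naturally onto data-driven automation: each row is one example, and a small runner checks every row against the system. The following is a minimal sketch in Python, assuming the table has been exported from a spreadsheet as CSV. The discount rule, the column names, and the `discounted_total` function are hypothetical, invented only for illustration.

```python
import csv
import io

# Hypothetical business rule under test: orders of 100 or more get 10% off.
def discounted_total(order_total: float) -> float:
    return round(order_total * 0.9, 2) if order_total >= 100 else order_total

# Examples a business expert might keep in a spreadsheet, exported as CSV.
# The column names are assumptions for this sketch.
EXAMPLES_CSV = """order_total,expected_total
50.00,50.00
99.99,99.99
100.00,90.00
250.00,225.00
"""

def run_table(csv_text: str) -> list[str]:
    """Check every row of the table; return a description of each failing row."""
    failures = []
    rows = csv.DictReader(io.StringIO(csv_text))
    for row_number, row in enumerate(rows, start=1):
        order_total = float(row["order_total"])
        expected = float(row["expected_total"])
        actual = discounted_total(order_total)
        if actual != expected:
            failures.append(f"row {row_number}: {order_total} -> {actual}, expected {expected}")
    return failures

if __name__ == "__main__":
    failures = run_table(EXAMPLES_CSV)
    print("all rows passed" if not failures else "\n".join(failures))
```

Business experts can add a row to the table without touching the runner, which is one reason teams that live in spreadsheets find this format easy to follow.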

I’ve worked on a team developing a product for use by other development teams, where only coders created, maintained and read the tests. Writing them directly in a programming language worked fine and saved time.
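For a team like that, a test written directly in code might look something like the minimal sketch below, which uses Python’s built-in `unittest` module. The `parse_version` function is a hypothetical stand-in for the product under test; the point is that descriptive test names keep even code-only tests readable as living documentation.

```python
import unittest

# Hypothetical library function standing in for the product under test.
def parse_version(text: str) -> tuple[int, int, int]:
    """Parse a 'major.minor.patch' string into a tuple of integers."""
    parts = text.strip().split(".")
    if len(parts) != 3 or not all(part.isdigit() for part in parts):
        raise ValueError(f"not a major.minor.patch version: {text!r}")
    major, minor, patch = (int(part) for part in parts)
    return major, minor, patch


class ParseVersionTest(unittest.TestCase):
    # Each test name states one behavior, so the suite reads like a spec.
    def test_parses_major_minor_and_patch(self):
        self.assertEqual(parse_version("2.10.3"), (2, 10, 3))

    def test_ignores_surrounding_whitespace(self):
        self.assertEqual(parse_version(" 1.0.0\n"), (1, 0, 0))

    def test_rejects_incomplete_versions(self):
        with self.assertRaises(ValueError):
            parse_version("1.2")
```

Saved in a file whose name starts with `test` (for example, a hypothetical `test_versions.py`), this suite can be run with `python -m unittest`, which also makes it straightforward to call from a CI job.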

Budget time to gather your requirements and constraints for selecting tools.

- Is your architecture a monolith or microservices? Each calls for different types of testing.

- Do you want a tool that can support exploratory testing or other activities by generating data or getting the application to a certain state?

- Do data-driven tests using tables of inputs and expected outputs work for your product?

- Have some of your team members used keyword-driven tests before, and do they prefer that approach?

- If you have a legacy code base, you may have some constraints that greenfield projects don’t have.
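For teams weighing the keyword-driven option, this is roughly how the approach works: readable keywords are mapped to code that drives the system, and each test is just a sequence of keywords and arguments. Tools such as Robot Framework are built around this idea. The sketch below is a hand-rolled Python illustration, not any tool’s API; the `Cart` class, the keyword names, and the test table are hypothetical.

```python
# Hypothetical system under test: a tiny shopping cart.
class Cart:
    def __init__(self) -> None:
        self.items: dict[str, int] = {}

    def add(self, item: str, quantity: int) -> None:
        self.items[item] = self.items.get(item, 0) + quantity

    def remove(self, item: str) -> None:
        self.items.pop(item, None)


def run_keyword_test(steps: list[tuple[str, ...]]) -> None:
    """Run (keyword, arg, ...) steps against a fresh Cart.

    Raises AssertionError at the first check that fails.
    """
    cart = Cart()

    def quantity_should_be(item: str, expected: str) -> None:
        actual = cart.items.get(item, 0)
        if actual != int(expected):
            raise AssertionError(f"{item}: expected {expected}, got {actual}")

    # Each keyword maps a readable phrase onto code that drives the system.
    keywords = {
        "Add Item": lambda item, qty: cart.add(item, int(qty)),
        "Remove Item": cart.remove,
        "Quantity Should Be": quantity_should_be,
    }
    for keyword, *args in steps:
        keywords[keyword](*args)


# A test case is a list of keyword rows; arguments are strings, like table cells.
ADD_AND_REMOVE_APPLES = [
    ("Add Item", "apple", "2"),
    ("Add Item", "apple", "1"),
    ("Quantity Should Be", "apple", "3"),
    ("Remove Item", "apple"),
    ("Quantity Should Be", "apple", "0"),
]

run_keyword_test(ADD_AND_REMOVE_APPLES)  # completes silently when every step passes
```

The trade-off is that someone has to build and maintain the keyword library, so this style pays off mainly when non-coders will be composing many tests from a stable set of keywords.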

Discuss all this together and figure out your “must haves”.

Here’s an example of what a team might look for in an automation tool:

- Supports collaboration among developers, testers and product owners

- Uses the same programming language as our application does

- Will provide adequate regression testing protection for the next five years

- Has easy-to-understand result reporting that makes it easy to diagnose test failures

- Integrates easily with our existing continuous integration tool

We’re fortunate to have so many test tools available today. Budget time to do your research and try them out.

On one of my teams, we needed an automation library and framework to support our newer UI code, which had more business logic than previously. Our system administrator volunteered to do a proof of concept on a popular driver, and the results were positive.

We wrote stories to research automation frameworks to use with that driver library and spent a couple of iterations doing that along with our regular work. Then we had a series of “bake-offs” where we automated tests for the same story in two different frameworks to see which worked better for us.

It was a major investment.

But considering that our previous test automation tool had served us well for eight years, it was an investment worth making. That same team is still using the tools we chose today, eight years later.

Evolving your models

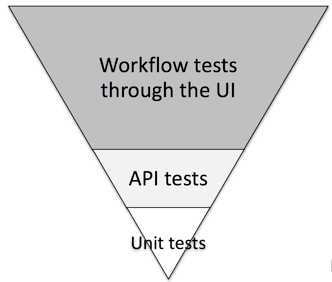

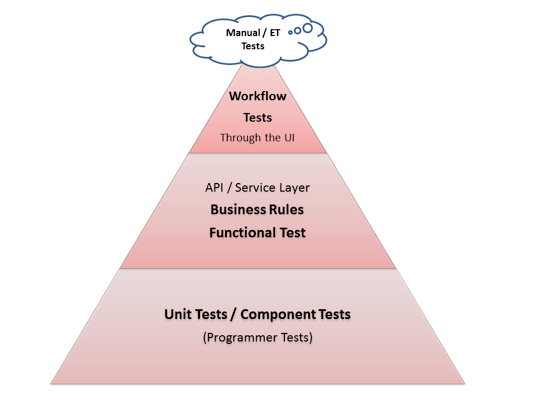

It’s typical for teams to start with an “upside-down” test automation pyramid. This can be a viable approach. Automate some happy-path smoke tests at the UI level to cover the most critical areas of the application.

This cuts down on the time spent doing manual regression testing, so the team can invest time to learn how to write unit-level tests.

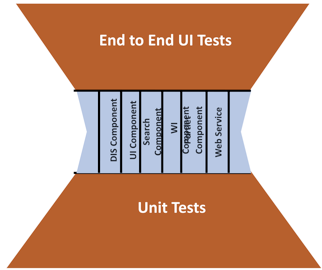

After they get traction on this base layer that provides the foundation for automation in most contexts, their “pyramid” turns into more of an “hourglass”.

This might work fine in certain situations. Or, the team may find they can fill in more API and service-level tests to move to the right-side-up triangle shape.
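One lightweight way to see which shape your suite currently has, and whether it is moving in the direction you want, is to count tests per layer at regular intervals. The Python sketch below assumes you can obtain those counts (for example, from your CI reports); the classification rules, the sprint labels, and the sample counts are hypothetical and only illustrate the idea.

```python
def suite_shape(unit: int, api: int, ui: int) -> str:
    """Name the rough shape of a test suite from its test counts per layer.

    The rules below are a hypothetical heuristic, not a standard definition.
    """
    if unit > api > ui:
        return "pyramid"  # widest at the unit-test base
    if unit > api and ui > api:
        return "hourglass"  # wide base and top, thin API/service middle
    if ui >= api >= unit and ui > unit:
        return "upside-down pyramid"  # mostly UI-level tests
    return "mixed"


# Illustrative snapshots: (label, unit tests, API/service tests, UI tests).
history = [
    ("sprint 1", 0, 0, 12),
    ("sprint 6", 150, 10, 40),
    ("sprint 12", 300, 120, 45),
]
for label, unit, api, ui in history:
    print(f"{label}: {suite_shape(unit, api, ui)}")
# sprint 1: upside-down pyramid
# sprint 6: hourglass
# sprint 12: pyramid
```

Posting a summary like this next to your other big visible charts keeps the evolution of the model in front of the whole team.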

Look for quick wins

Remember: at first, test automation is simply more work on top of the work you’re already doing. It takes time to build the skills and to build up a library of reusable automated test components. Stick with it through this painful period.

Eventually, the team will build enough expertise and have enough reusable pieces and parts that automation starts to save effort. Now you have extra time to spend on other valuable activities.

As your team talks strategy, look for the best place to start automating. Maybe there are areas of the application that historically have had a lot of bugs. They aren’t showstoppers, but they’re annoying and time-consuming to fix. Automating regression tests there could add a lot of value quickly.

Maybe there are areas of your code that are designed more for testability, and automation would be really easy there.

It’s a good idea to go for the quick wins. It will be easier to learn automation skills and see the value.

Richard Bradshaw recently wrote about the lack of visualization for testability when we look at test automation models like the pyramid, so you may want to draw that into the visual model you’re using for talking about your strategy.

Or, if there are highly critical areas of your app where both the probability and the impact of failure are high, that might be the place to start automating.
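A common way to compare candidate areas is a simple risk score: rate the probability of failure and the impact of failure for each area, then multiply the two. The Python sketch below assumes a 1-to-5 rating scale for each factor; the area names and ratings are hypothetical, and in practice they would come from your team’s discussion and bug history.

```python
from dataclasses import dataclass


@dataclass
class Area:
    name: str
    probability: int  # assumed scale: 1 (rarely fails) to 5 (fails often)
    impact: int       # assumed scale: 1 (minor annoyance) to 5 (critical)

    @property
    def risk(self) -> int:
        # Risk score as probability multiplied by impact.
        return self.probability * self.impact


def automation_candidates(areas: list[Area], top_n: int = 3) -> list[str]:
    """Return the names of the top_n areas, highest risk score first."""
    ranked = sorted(areas, key=lambda area: area.risk, reverse=True)
    return [area.name for area in ranked[:top_n]]


# Hypothetical ratings, for illustration only.
areas = [
    Area("checkout", probability=3, impact=5),
    Area("search", probability=4, impact=2),
    Area("profile settings", probability=2, impact=1),
    Area("login", probability=2, impact=5),
]
print(automation_candidates(areas, top_n=2))  # prints ['checkout', 'login']
```

The numbers matter less than the conversation they prompt; a score like this simply makes the team’s reasoning about where to start visible.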

Collaborate & keep it simple

As your team starts planning each new feature, ask yourselves how you will automate tests for it. Work in small increments – automate a test or two, add it to a test suite in your continuous integration, see what benefits occur. Keep your tests as simple as possible.

Remember to treat your test code with the same care as the production code. Use good coding and test automation practices and patterns.

Pairing can help team members build skills. As a tester, I like to pair with a developer. I’m good at specifying tests, she’s good at writing really great, maintainable code. We learn from each other. I prefer strong-style pairing, so that if there’s something I don’t know how to do, my pair can navigate me through it, and vice versa.

Mobbing is another super effective way for a cross-functional team to get traction on test automation. The mob can create both the production code and the automated tests together. Everyone you need is in the room, all questions can be answered right away!

Experiment & celebrate

If your initial strategy isn’t moving you towards your initial goal, brainstorm together to think of a new approach to try for the next couple of weeks. See if other visual models, from Parts 1 and 2 of this series or something you come up with yourselves, could help the team see things from a new perspective.

Remember to celebrate every small success! If you had no automated tests before, and you run your first automated test suite in your continuous integration pipeline – even if it’s just one test! – go out for a treat, play some pong, whatever your team enjoys together.

On one of my own teams where we started out with no automation, we had a team party when we reached 100 JUnit tests. When we got to 1,000 JUnit tests, we had a party for the whole company and explained the value of the unit tests to the business folks.

Don’t worry about failed experiments or setbacks. That’s how you learn. Better to fail fast and learn fast! Just keep on working towards your attainable, measurable goals with small, frugal experiments.

Testing for many purposes

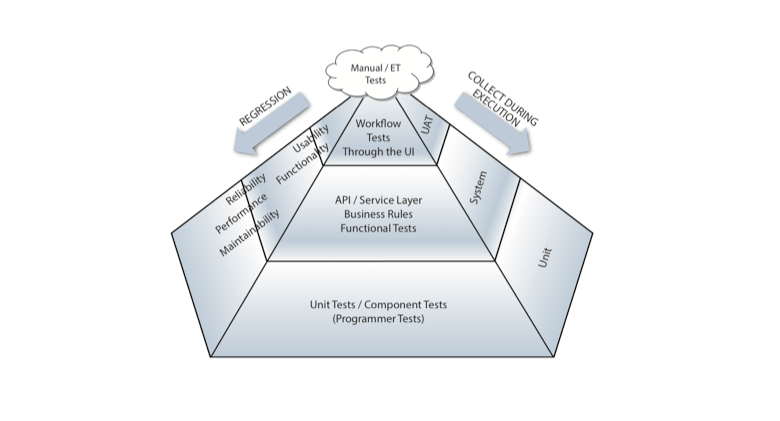

Here’s one more adaptation of the test automation pyramid to help you think about automation.

We test to learn about our application, and there are so many different areas to cover. Sharon Robson’s pyramid includes the original one showing the layers and what they’re designed to test. She adds the tools used to test on the right-hand side of her three-dimensional pyramid: system testing for testing functionality and business rules in the middle layer, and user acceptance testing at the UI level.

This means the team needs to have the right types of tools for the people doing testing at each level. For example, a capture/replay type automation tool could help the users doing UAT. Unit level test tools would be specific to the technologies being used and integrated with the CI.

Expanding the pyramid this way helps everyone understand there’s not one tool that will fill every need. Sharon advises mapping the tool to the tester as well as the testing.

The third side of Sharon’s pyramid, on the left in the picture, has an overlay of test types or system attributes. That helps the team be sure all necessary types of testing are covered.

This model provides detailed guidance for planning all necessary test automation activities, getting appropriate tools for the type of testing and the people doing the automation, and building in testability. Note that regression testing may be needed for any of these quality attributes.

Get started!

I’ve covered a lot in these three blog posts. Test automation can seem like an overwhelming effort, but the payoff is even bigger once you get over that initial learning curve. You’ll save so much time, and you’ll put that time to better use with more valuable activities.

Get your team together, identify the biggest pain point that automation could help with, and start small.

Use visual models to have conversations about why you are automating, who could help within and outside of your team, how people with different skills can help, and what your next action items are.

Do small experiments, track your progress, keep making small improvements.

You’ll get there!

About the Author Lisa Crispin

Lisa Crispin is a Test Advocate at Mabl. She is the co-author, with Janet Gregory, of More Agile Testing: Learning Journeys for the Whole Team (2014) and Agile Testing: A Practical Guide for Testers and Agile Teams (2009), and the co-author, with Tip House, of Testing Extreme Programming (2002). Lisa was voted by her peers as the Most Influential Agile Testing Professional Person in 2012. Lisa enjoys working as a tester and sharing her experiences in the agile and testing communities. Please visit www.lisacrispin.com for more.

Resources for more learning

- “Keep your automated tests simple and avoid anti-patterns”, Lisa Crispin, https://www.mabl.com/blog/keep-your-automated-testing-simple

- “Test automation: Five questions leading to five heuristics”, Joep Schuurkes, https://testingcurve.wordpress.com/2015/03/24/test-automation-five-questions-leading-to-five-heuristics/

- “Powerful test automation practices”, parts 1 and 2, Lisa Crispin and Steve Vance, https://www.mabl.com/blog/powerful-test-automation-practices-pt-1, https://www.mabl.com/blog/powerful-test-automation-practices-pt-2

- “Test Suite Design”, Ashley Hunsberger, https://github.com/ahunsberger/testSuiteDesign

- Accelerate: The Science of Lean Software and DevOps, Nicole Forsgren et al., https://itrevolution.com/book/accelerate/

- Agile Testing: A Practical Guide for Testers and Agile Teams, and More Agile Testing: Learning Journeys for the Whole Team, Lisa Crispin and Janet Gregory, https://agiletester.ca

- “Analyzing automated test failures”, https://www.mabl.com/blog/lisa-webinar-analyzing-automated-ui-test-failures

- Strong-Style Pair Programming and Mob Programming Guidebook by Maaret Pyhäjärvi, https://leanpub.com/u/maaretp

- “Testability AMA” with Ash Winter and Vernon Richards, https://www.ministryoftesting.com/dojo/lessons/testing-ask-me-anything-testability-ash-winter?s_id=43200

- Team Guide to Testability, Rob Meaney and Ash Winter, https://leanpub.com/softwaretestability