I’m excited to publish part two of a three-part series by Lisa Crispin (Mabl) on modeling your test automation strategy.

If you missed it, check out part one, “Test Automation Modeling: Start the Conversation.” Now back to Lisa:

In the second part of this three-part series, we’ll revisit the purpose of using visual models to help formulate an automation strategy.

In Part 1, we looked at the test automation pyramid and other pyramid- and triangle-shaped models.

In this post, we’ll check out quadrant models to help plan automation, risk-based models to help prioritize where to automate, band-pass models to think about automated test coverage, and some more triangular-shaped models. These all help you ask good questions about your automation goals and how to achieve them.

All Test Models are Wrong

The statistician George Box is famous for saying “all models are wrong,” though he may not have originated the phrase. He was talking about statistical models, but the idea applies to scientific models in general.

Remember, models are thinking tools, not rules.

We can use them to stimulate conversations, think through ideas generated by those conversations, and design experiments to test out our ideas.

It seems to be popular these days to attack Mike Cohn’s test automation pyramid, which I covered in Part 1 of this series.

In my opinion, these people are totally misunderstanding the model.

They think it calls for a specific percentage of automated regression tests at the unit, API/services, and UI/workflow levels.

This is not the case.

The pyramid model is a thinking tool to get your team looking at ways you can potentially push as many tests down to a lower level as makes sense. It helps you focus on what really needs to be tested and how to do that with the fastest feedback and least pain. It doesn’t apply in every context, which is why we see lots of adaptations of it as well as many other types of models.

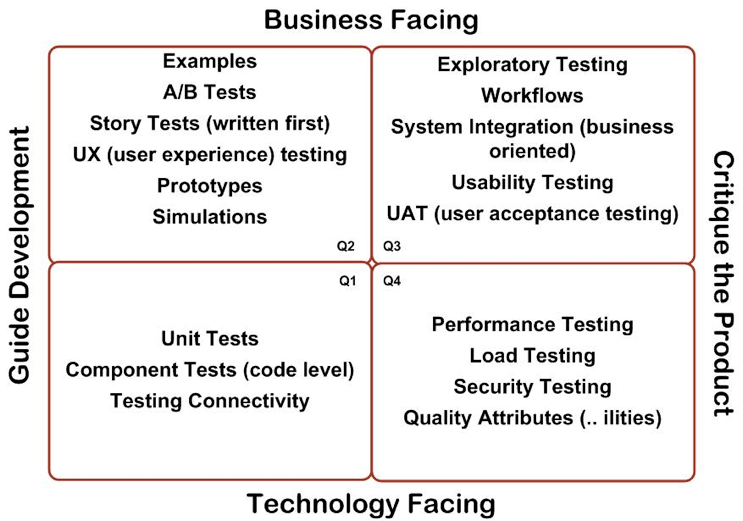

Quadrant models

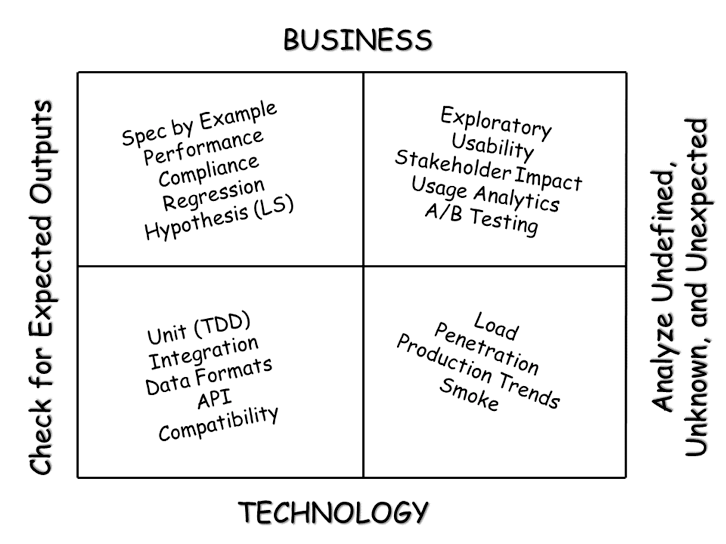

Janet Gregory and I based our agile testing quadrants on Brian Marick’s.

Brian came up with his idea of customer-facing tests versus technology-facing tests, and tests that support the team versus tests that critique the product, back in 2003. Janet remembers sitting with him late one evening at a conference, drawing quadrants on a napkin.

With Brian’s blessing, we have made some tweaks to the model over the years.

The quadrants are intended to be a thinking tool, a taxonomy of tests that may need to be done at some point as we design, build and deliver features incrementally and iteratively. There are no hard-and-fast rules about what types of tests belong in which quadrant, and certainly no implication of what order they should be done in.

The quadrants apply to all kinds of testing activities, not only automation, but they’re useful for thinking about which tests we want to automate, when we want to automate them, and who might do that automation.

Quadrant 1: Technology-facing Tests that Guide Development

In Quadrant 1 (we numbered the quadrants for convenience; the numbers don’t reflect the order in which tests are done) are the automated unit tests. These play an important role in code design, especially for teams practicing test-driven development, where writing them is a design activity rather than a testing activity. They also have the nice by-product of serving as automated regression tests as soon as the code is complete.

Because these are all about ensuring code correctness, they must be written by the same people who are writing the production code, bit by bit: a failing unit test for one small piece of a feature, then just enough code to make it pass, then repeat with the next piece.
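To make that rhythm concrete, here is a minimal sketch using Python’s built-in `unittest` module. The `apply_discount` function and its rules are hypothetical, made up for illustration. In a test-driven flow, `test_ten_percent_off` would be written first and fail, then just enough code would be added to make it pass; `test_rejects_percent_over_100` then drives the next small piece.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical production code, grown one small test at a time."""
    # Added in the second red-green cycle, after the invalid-percentage
    # test was written and failed.
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    # First cycle: just enough code to make the 10% discount test pass.
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    # Written first; it failed until apply_discount existed.
    def test_ten_percent_off(self):
        self.assertEqual(apply_discount(50.0, 10), 45.0)

    # The next small piece of behavior, again written before the code.
    def test_rejects_percent_over_100(self):
        with self.assertRaises(ValueError):
            apply_discount(50.0, 150)
```

Saved as, say, `test_discount.py`, it runs with `python -m unittest test_discount`. Once both tests pass, they stay in the suite as regression checks.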

Quadrant 2: Business-facing Tests that Guide Development

Many of the Quadrant 2 tests are inherently manual, such as prototypes and examples. Talking through the examples, prototypes, mockups and business rules helps us achieve shared understanding across the delivery and customer teams.

When we turn examples into executable story tests, they tell us what code to write and when we are done with the story. Like unit tests, they have the nice side effect of becoming automated regression tests, which are kept passing with every build after that.

In my experience, this type of automation is best done as a collaboration between testers, developers, and possibly others such as designers and database experts.
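As a rough illustration, here is how a table of examples might become an executable story test. The free-shipping rule, its threshold, and the names `shipping_cost` and `SHIPPING_EXAMPLES` are all hypothetical, and many teams use a dedicated business-readable tool for this; the sketch sticks to Python’s standard `unittest` to stay self-contained. The point is that the examples the team talked through stay visible as data, and each one becomes a check.

```python
import unittest

# Hypothetical business rule, for illustration only:
# orders of $50.00 or more ship free; smaller orders pay a flat $5.00.
FREE_SHIPPING_THRESHOLD = 50.00
FLAT_SHIPPING_FEE = 5.00


def shipping_cost(order_total: float) -> float:
    """Production code that the examples drive out."""
    return 0.0 if order_total >= FREE_SHIPPING_THRESHOLD else FLAT_SHIPPING_FEE


# The examples as the team agreed on them, kept as a readable table.
# Each row: (description, order total, expected shipping cost).
SHIPPING_EXAMPLES = [
    ("just under the threshold pays shipping", 49.99, 5.00),
    ("exactly at the threshold ships free", 50.00, 0.00),
    ("well over the threshold ships free", 120.00, 0.00),
]


class FreeShippingStoryTest(unittest.TestCase):
    def test_examples(self):
        for description, total, expected in SHIPPING_EXAMPLES:
            # subTest reports each failing example separately.
            with self.subTest(description):
                self.assertEqual(shipping_cost(total), expected)
```

Because `subTest` reports each failing row on its own, a broken example is easy to trace back to the conversation it came from.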

Quadrant 3: Business-facing Tests that Critique the Product

Once we have a testable artifact we can deploy somewhere for testing, we can start evaluating whether the feature behaves according to our shared understanding of how it should behave, whether we’ve missed some capability, and what else we can learn. Anyone on the team can jump in to do Quadrant 3 activities such as exploratory testing, workflow testing, and the like.

User acceptance testing falls into this category. We may use automation to enable manual testing here, for example, to populate test data or to get the system into a particular state for exploration.
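Here is a minimal sketch of that kind of setup automation, assuming a SQLite database and a hypothetical `accounts` table. It resets the table and loads accounts in states that are tedious to reach by hand, such as an expired trial or a locked-out user, so a tester can start exploring right away. The schema, statuses, and function name are invented for illustration.

```python
import sqlite3

# Hypothetical schema, for illustration only.
SCHEMA = """
CREATE TABLE accounts (
    id INTEGER PRIMARY KEY,
    email TEXT NOT NULL UNIQUE,
    status TEXT NOT NULL,  -- e.g. 'active', 'trial_expired', 'locked'
    failed_logins INTEGER NOT NULL DEFAULT 0
)
"""

# Each fixture puts one account into a state worth exploring by hand.
FIXTURES = [
    ("active.user@example.com", "active", 0),
    ("expired.trial@example.com", "trial_expired", 0),
    ("locked.out@example.com", "locked", 5),
]


def seed_exploration_data(conn: sqlite3.Connection) -> int:
    """Drop and recreate the accounts table, load the fixtures, return the row count."""
    conn.execute("DROP TABLE IF EXISTS accounts")
    conn.execute(SCHEMA)
    conn.executemany(
        "INSERT INTO accounts (email, status, failed_logins) VALUES (?, ?, ?)",
        FIXTURES,
    )
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM accounts").fetchone()[0]
```

Against a throwaway copy of a test database, a call such as `seed_exploration_data(sqlite3.connect("exploration.db"))` gives every exploratory session the same starting point. Because the function drops and recreates the table, re-running it resets the data instead of duplicating it.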

Quadrant 4: Technology-facing Tests that Critique the Product

Don’t be fooled by this “last” quadrant: you can’t necessarily wait until a finished feature is delivered to do these testing activities. Performance, reliability, security, and other quality attributes may be more important to the business than “functional” behavior and may need to be tested first.

For example, I’ve frequently been involved with doing a spike on a new system architecture with throw-away code and then doing performance testing to make sure it scales. If it does, we start building the system using good technical practices.

Some people feel intimidated by the automation in this quadrant if they aren’t familiar with it. Just take it step by step. Your team may need to devote some time to learning, invest in some good tools, and plan for this testing up front so you don’t get caught right before release with a showstopper problem.

This quadrant needs an update in our visual because it also includes the monitoring, observability, and analytics that are crucial in today’s DevOps and continuous delivery world.

Instrumenting code so that every event can be identified in log files, as well as the process of gathering and analyzing that log data, isn’t test automation, but it’s definitely automation that helps us learn what we need to know to properly focus our testing.
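As a sketch of what “every event can be identified” might look like, the snippet below uses Python’s standard `logging` module to write one JSON object per event, each with a stable event name and a unique `event_id`. The field names, the `checkout` logger, and the `log_event` helper are assumptions for illustration; in practice, the format would follow whatever the team’s log aggregation and monitoring tools expect.

```python
import json
import logging
import uuid
from typing import Optional


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object on one line."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "time": self.formatTime(record),
            "level": record.levelname,
            "event": record.getMessage(),
            # Values passed through `extra=` become attributes on the record.
            "event_id": getattr(record, "event_id", None),
            "user_id": getattr(record, "user_id", None),
        }
        return json.dumps(payload)


def log_event(logger: logging.Logger, event: str, user_id: Optional[str] = None) -> str:
    """Log a named event with a fresh unique ID and return that ID."""
    event_id = str(uuid.uuid4())
    logger.info(event, extra={"event_id": event_id, "user_id": user_id})
    return event_id


# Hypothetical logger for a checkout service, writing JSON lines to stderr.
logger = logging.getLogger("checkout")
handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger.addHandler(handler)
logger.setLevel(logging.INFO)
```

Calling `log_event(logger, "order_submitted", user_id="u-123")` writes a single JSON line to standard error and returns the event ID, so the same event can be traced through the logs later.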

A Different Take on the Quadrants

A few years after Janet and I started using our Quadrants, Gojko Adzic felt they didn’t meet the needs of a lot of teams.

He raised awareness about a whole category of things that don’t fit into typical test plans or reports but are super valuable, in the same way that “Quadrant 2” raised awareness of things like specification by example and prototyping.

Gojko added a new horizontal axis: checking for expected outcomes on the left, and analyzing the unknown, undefined, or unexpected on the right. On the left side, it makes sense to automate checks for requirements such as performance service-level agreements and regulatory compliance.

As with Janet’s and my Quadrants, automation can be used for at least some activities in every quadrant. The instrumentation and logging that help us get so much useful data about production use and uncover unknowns in production fit on the right side.

Risk-based Automation

Regression test automation acts as a safety net, giving us immediate feedback when the team makes a change that breaks something customers are already using. It lowers the risk of Bad Things happening in production.

Few, if any, teams can afford to automate All The Things at once. It makes sense to make automation investments in the highest-risk areas of our app. There are lots of ways to analyze risk; most consider both the impact of a particular failure and how likely it is to occur.
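One simple way to turn that into a ranked list is a risk score: rate each area’s impact and likelihood on a small scale, say 1 to 5, and multiply them. This is just one common heuristic, not the only way to analyze risk, and the areas and ratings below are hypothetical; in a real team they would come out of a whiteboard conversation.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class RiskArea:
    name: str
    impact: int      # 1 (minor annoyance) .. 5 (lost revenue or data)
    likelihood: int  # 1 (rarely changes or breaks) .. 5 (changes every sprint)

    @property
    def score(self) -> int:
        # A common heuristic: risk = impact x likelihood.
        return self.impact * self.likelihood


def automation_priorities(areas: List[RiskArea]) -> List[RiskArea]:
    """Order areas from highest to lowest risk score (ties keep input order)."""
    return sorted(areas, key=lambda area: area.score, reverse=True)


# Hypothetical ratings a team might agree on at the whiteboard.
areas = [
    RiskArea("profile photo upload", impact=1, likelihood=3),
    RiskArea("checkout and payment", impact=5, likelihood=4),
    RiskArea("search filters", impact=3, likelihood=4),
    RiskArea("login", impact=5, likelihood=2),
]

for area in automation_priorities(areas):
    print(f"{area.score:>2}  {area.name}")
```

With these made-up ratings, checkout and payment (20) comes out on top, followed by search filters (12), login (10), and profile photo upload (3). The ranking is a conversation starter, not a verdict: if login feels more important than its score suggests, that disagreement is worth talking through.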

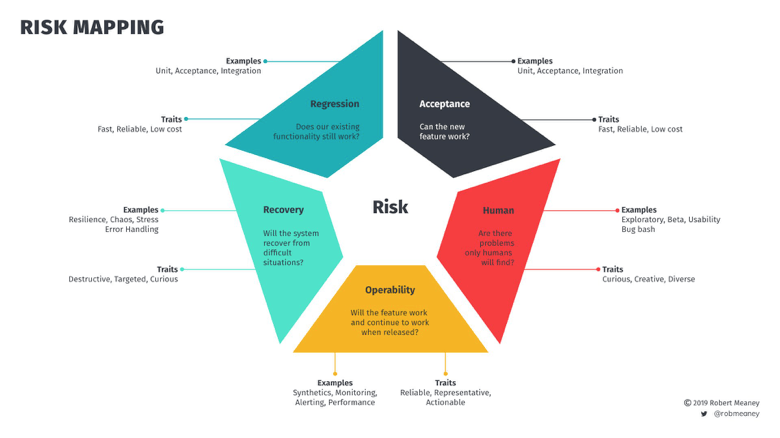

Rob Meaney’s Risk Mapping

Rob Meaney’s team drives their test approach by risk. They use a pentagonal model where each section answers a specific question.

Rob’s model covers more than functionality. Architecture, data integrity, I/O, monitoring, and observability are a few of the diverse areas included. For some of these, such as regression and acceptance, automation is appropriate. Operability also involves automation, but of a different kind.

As I said earlier about Quadrant 4 in the Agile Testing Quadrants, there can be tradeoffs between automated tests and automation that helps us test in production.

You don’t need to copy this exact model, but thinking about risk is a useful way to build your automation strategy. Start drawing on a whiteboard and talking about it. What questions around risk does your team need to have answered? What test automation can help you get those answers? Who can help with these activities? Visualizing the risks helps you set priorities around what to automate and when.

Dependency Mapping

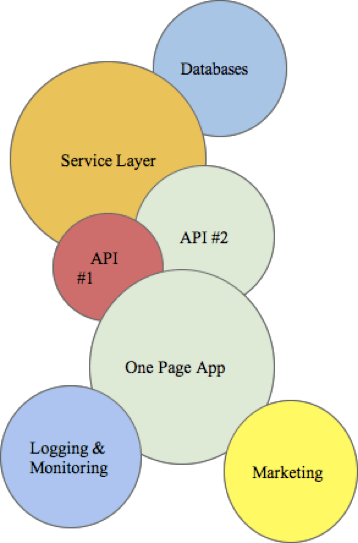

My previous team couldn’t automate tests for logging on to our app via Google, because Google would block us. We were aware of that gap in our automation and checked it manually as needed.

Dependencies on third-party tools can raise a lot of questions when you start automating tests. Do you really need your tests to interact with those external systems? Or can you fake, stub, or mock them out?

Melissa Eaden introduced me to dependency mapping. It’s simple: draw a circle (or any shape you like) representing the part of your app that customers interact with.

For example, if you have a web-based app, the circle represents the UI. Next, draw the other systems that piece depends on. Your UI probably uses one or more APIs, a server, a database, and maybe external systems. It may link to marketing pages and perhaps to customer support. You probably have logging and monitoring systems, too.

Draw all those, representing their importance with the size of the shape. These dependencies all represent some level of risk. If the marketing pages stop loading, what will happen to the product UI? What if the load balancer goes down?

Dependency mapping is a great way to identify relationships you need to build. (TIP: Katrina Clokie’s book A Practical Guide to Testing in DevOps goes into a lot of detail about building those bridges.)

A whole-team approach isn’t limited to the software delivery team. When I do dependency maps, I also like to include other teams that can help us identify what our tests need to cover. I can get a lot of information about what customers value in our product by talking to marketing and sales people. I can learn about their pain points from customer support representatives. A database expert could walk me through performance risks related to database queries.

This simple brainstorming tool helps me think of risks I hadn’t considered before. It’s even better if you do it together with other team members. It helps to identify gaps in test coverage, possible duplication of effort, risks in the interfaces between systems, and opportunities to speed up automated tests by faking, stubbing, or mocking external components.
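Here is a minimal Python sketch of stubbing out an external identity provider, like the Google login mentioned above, using the standard library’s `unittest.mock`. The `sign_in` function, the provider’s `verify` method, and `IdentityProviderError` are hypothetical stand-ins for whatever your app actually uses. The first test shows a stub (a canned answer) plus a mock-style check of how the code called the dependency; the second simulates the provider rejecting a token.

```python
from unittest import mock


class IdentityProviderError(Exception):
    """Raised when the (hypothetical) external identity provider rejects a token."""


def sign_in(provider, token: str) -> dict:
    """Hypothetical app code that verifies a token with an external provider.

    `provider` is any object with a `verify(token)` method that returns the
    user's email or raises IdentityProviderError. In production it would wrap
    the real third-party service; in tests we pass a stand-in.
    """
    try:
        email = provider.verify(token)
    except IdentityProviderError:
        return {"signed_in": False, "error": "invalid_token"}
    return {"signed_in": True, "email": email}


def test_sign_in_with_stubbed_provider():
    # Stub: a canned answer, with no network call to the real provider.
    provider = mock.Mock()
    provider.verify.return_value = "user@example.com"

    result = sign_in(provider, "fake-token")

    assert result == {"signed_in": True, "email": "user@example.com"}
    # Mock-style check: verify how our code talked to the dependency.
    provider.verify.assert_called_once_with("fake-token")


def test_sign_in_when_provider_rejects_token():
    provider = mock.Mock()
    provider.verify.side_effect = IdentityProviderError("token expired")

    assert sign_in(provider, "stale-token") == {"signed_in": False, "error": "invalid_token"}
```

Because the stand-in answers instantly and never calls the real provider, tests like these stay fast and can’t be blocked the way the real Google login was. The trade-off is that they say nothing about whether the real integration works, so that gap still needs covering, manually or otherwise.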

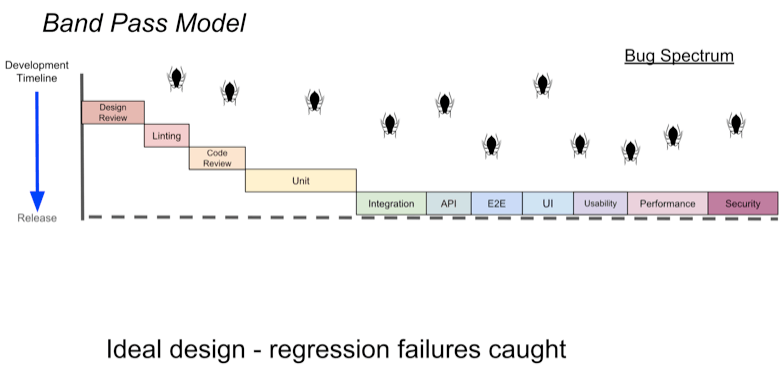

Band Pass Filter Models

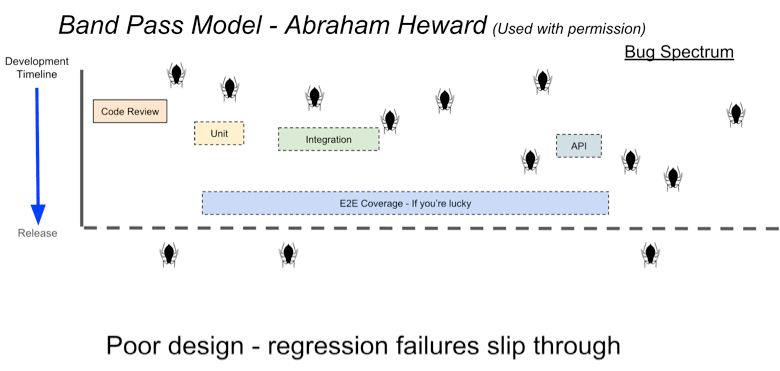

I looked up “band-pass filter”: in electronics, it passes signals within a certain frequency range and blocks those outside it. The idea here is to think about your test coverage in terms of what types of bugs you can filter out at different stages of testing.

Abraham Heward’s model

Abraham Heward uses his band pass filter model to illustrate a poor strategy design where bugs are able to slip through. There are big gaps between the activities and test suites that are intended to catch regression failures.

Our goal should be to tie these various activities together so that different types of regression failures at different levels are caught before release. Linting and code review can give the fastest feedback about potential regression issues.

Consciously designing tests at the integration, API, end-to-end, and other levels forms a solid safety net without too much overlap. Automated tests for other quality attributes, such as usability and performance, may be an essential part of the automation plan.

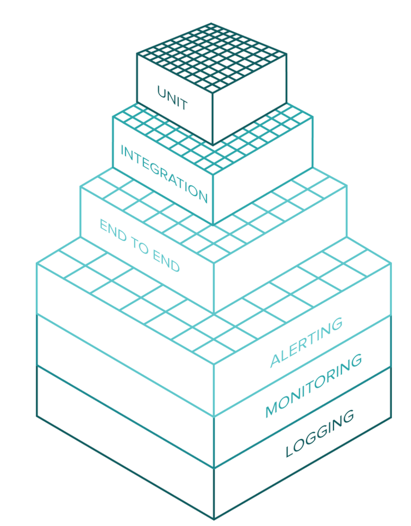

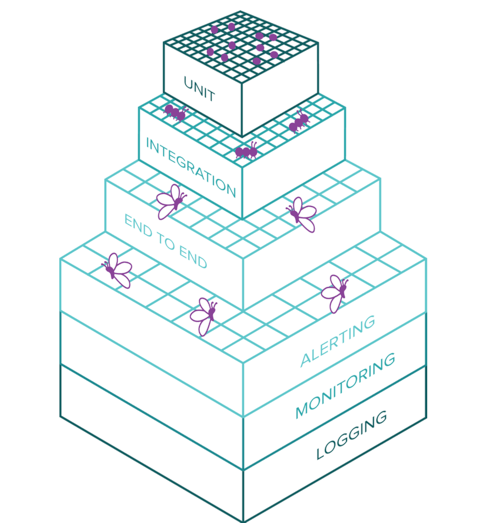

Katrina Clokie’s DevOps Bug Filter

In her book A Practical Guide to Testing in DevOps, Katrina Clokie presents a six-layer DevOps bug filter. The top three layers are for testing that occurs in the development environment: unit, integration, and end-to-end tests. The bottom three layers represent the information that is captured in production and can be used to detect bugs and determine product quality: logging, monitoring, and alerting.

Imagine the layers as different filters for bugs. Unit tests and logging have the finest-grained mesh, to catch the smallest bugs. Integration tests and monitoring have a slightly wider weave. End-to-end testing and alerting have the widest mesh, to capture only the largest problems. This reflects the fact that some bugs only appear as the application moves through several layers.

The feedback loop becomes longer with the lower layers in this model, but it gives us more confidence that the whole system is behaving as expected.

Katrina imagines the bugs that drop through this filter as being butterflies in all stages of their lifecycle. Unit tests are going to capture the eggs — bugs before they develop into anything of consequence. Integration tests are going to capture the caterpillars. These may have arisen from a unit test egg that has hatched in the integrated environment or may have crawled into our platform via a third party system. End-to-end tests capture the butterflies.

Personally, I might have thought of them as a more annoying kind of bug, because I like butterflies! But it’s a great visualization. Have some fun as you visualize your automation challenges and strategy!

Back to triangles

I’ll wrap up here with a few more models that are triangular in shape. All of them do a great job of representing different criteria for a successful automation strategy, depending on your context.

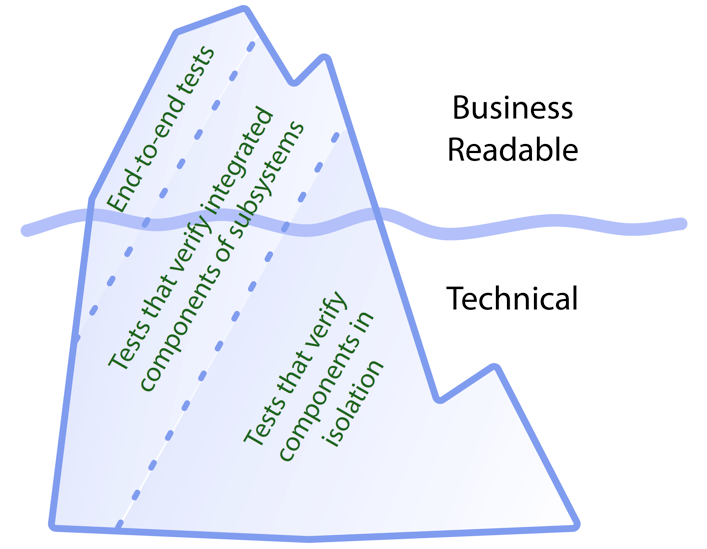

The Test Automation Iceberg

Our automated tests provide living documentation of how our system behaves. This documentation is always up to date, because our tests have to be passing every day! Documentation is only useful if the people who need it can understand it. If only people who write code look at our tests as documentation, it’s OK if the tests look like code. But if non-coders need to understand them, we may want our tests to be readable by them, too. Seb Rose’s test automation iceberg adds another dimension to the classic test automation pyramid.

Those portions of the iceberg above the waterline are business readable, while those below are not. We can map non-business-readable end-to-end tests to the submerged system test portion of the iceberg, which is very small because most end-to-end tests should be business-readable. Some projects may have specific technical concerns that can only be validated using a fully deployed system, and that are of no interest to business people, but these will be few and far between.

Remember, all of these are only models; there’s no “right” or “wrong.” They are thinking tools to help us have constructive conversations about our test automation approach.

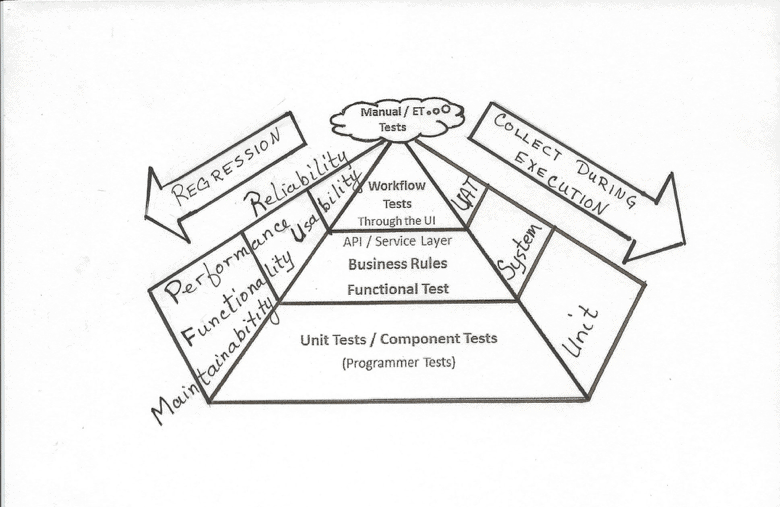

Sharon Robson’s Test Automation Pyramid

In my book with Janet Gregory, More Agile Testing, we were fortunate to have Sharon Robson contribute the four-step process she uses to talk about automation. She starts with Mike Cohn’s classic test automation pyramid but adds the tools that can be used to execute the tests, the types of tests or system attributes to be tested, and finally, the automated regression tests needed.

Finally, we have a real pyramid! This is a wonderful example of how you can adapt and extend a model to give you more value in your own team’s context.

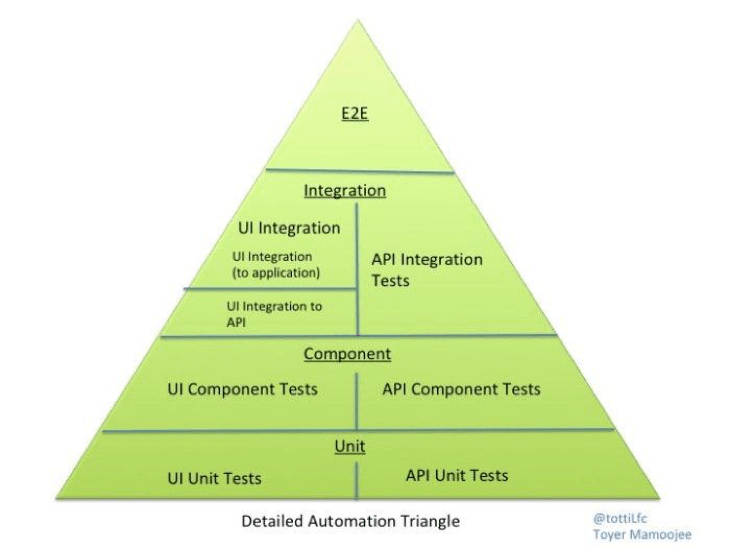

Toyer Mamoojee’s Triangle

I want to wrap up with Toyer Mamoojee’s “revisit” of the test automation pyramid, which, as he notes, is geometrically a triangle.

Toyer reminds us to use the model to ask good questions about the purpose of our test automation and our priorities around where we want to start automating.

Toyer cautions us that automation is not magic! You have to keep asking questions.

- What am I testing?

- Where do I want to automate?

- Are we automating just for the sake of it? Or just to fit the pyramid?

This model helps your team think about the big picture: the front end, back end, UI, API, and logging. It helps with the mentality people like to call “shift left,” which I prefer to call continuous testing.

Testers may do most of the UI component and integration testing, but may also have visibility into the unit and API tests written by developers. Understand your app and its inner workings when approaching your automation strategy.

Remember, this triangle, like all the other models, is just a guide. Get your team members together, ask questions, chat about answers, draw on the whiteboard, design experiments.

In my next installment, we’ll look at ways to implement the test automation strategy you build with the help of one or more of these visual models.

About the Author Lisa Crispin

Lisa Crispin is a Test Advocate at Mabl. She is the co-author, with Janet Gregory, of More Agile Testing: Learning Journeys for the Whole Team (2014) and Agile Testing: A Practical Guide for Testers and Agile Teams (2009), and co-author, with Tip House, of Testing Extreme Programming (2002). Lisa was voted by her peers as the Most Influential Agile Testing Professional Person in 2012. Lisa enjoys working as a tester and sharing her experiences in the agile and testing communities. Please visit www.lisacrispin.com for more.

Resources

- More Agile Testing, chapter on using models, Janet Gregory and Lisa Crispin, https://agiletester.ca/more-agile-testing-the-book/

- A Practical Guide to Testing in DevOps, Katrina Clokie, https://leanpub.com/testingindevops

- Discovery: Explore behavior using examples, Seb Rose and Gaspar Nagy, http://bddbooks.com/

- “Lower level automation and testing? Be more precise! The automation triangle revisited… again!” Toyer Mamoojee, https://toyerm.wordpress.com/2018/10/16/lower-level-automation-and-testing-be-more-precise-the-automation-triangle-revisited-again

- The Team Guide to Software Testability, Ash Winter and Rob Meaney, https://leanpub.com/softwaretestability

- “Using Dependency Mapping to Enhance Testing Techniques”, slide deck from European Testing Days 2019, Melissa Eaden and Lisa Crispin, https://drive.google.com/file/d/1HwRCpi91MeCgPleEyou4E14q3pJ6EIvg/view?usp=sharing