So, you finally have an automated test suite, and it's running in your continuous integration (CI) and continuous delivery (CD) systems.

Awesome, but now what?

Have you invested any time in your automated test reporting?

Automation, by definition, is supposed to make things easier: it should simplify and speed up repetitive, time-consuming manual processes.

But if you're like most of the testers I speak with on my automation podcast, your team is probably struggling with keeping flaky tests from failing the build.

The problem is the time spent debugging failing automated tests.

Is it a flaky script, a known issue, or a real bug?

That's where an intelligent, automated test reporting solution can help.

In this post, you’ll learn:

- How an AI-based solution can help solve test automation issues

- How to set up AI automation reporting

- Challenges you’ll face in test reporting and analysis

INDEX

AI in Automated Test Reporting Analysis

AI Automation Reporting Setup

Challenges in Test Reporting & Analysis

Automation Test Run Artifacts in Zebrunner

Test Automation Flakiness Over Time Tracker

Test Reporting Helps Collaboration

Central Automation Test Results Hub

How to Report Test Execution Effectively

Test Ownership

Automation Report Projects View

Integrations with Other Automation Tools

Check Out AI Automated Test Reporting for Yourself

AI in Automated Test Reporting Analysis

AI has become a buzzword in the industry.

But after speaking with many AI automation experts in the industry like Nikita Sidorevich, a product manager at Zebrunner, I believe that there are tangible aspects of AI and machine learning that can help test automation engineers take care of their automation issues.

One example is recurring issues that keep causing your tests to fail.

Remember, when you run large enterprise test suites, even a low failure rate like three percent translates to a lot of tests to debug and maintain. In a 5,000-test suite, for example, that is 150 failures to look at after every run.

You often have to process too much information, way more than you can keep in your head, and that's when machine learning can come in handy.

You can delegate many aspects of the analysis to machine learning.

By feeding the machine learning algorithms your past results and how you classified them, you train the underlying models. Over time, you will see the benefits of machine learning in reporting, especially when reviewing test run results.

Let’s take a look at Zebrunner, an automated test reporting tool, to see how an AI-based solution can help with these issues.

AI Automation Reporting Setup

Zebrunner CE (Community Edition) is a Test Automation Management Tool for continuous testing and continuous deployment. It allows you to run various tests and gain successive levels of confidence in the code quality.

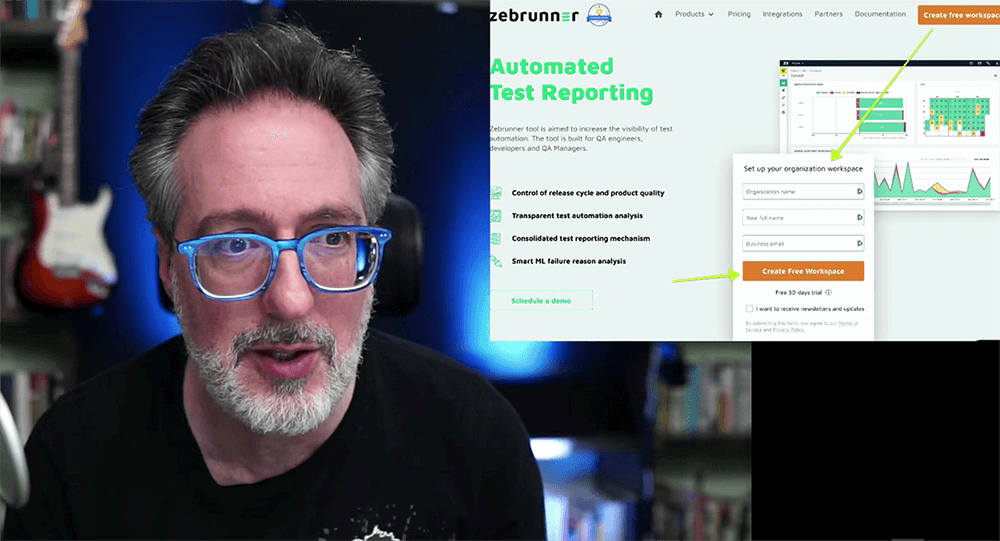

Because I didn't have the self-hosting infrastructure required to test it out, I tried their free 30-day cloud trial to see what it does.

I'm using Java and Maven, so to get started with their trial, all I needed to do was create an account and add my info to an agent.properties file in my project.

I then added a few dependencies to my pom.xml file, and I was off and running. For detailed installation information, check out their documentation. Now let's take a look at some examples of automated test reporting in action.

Challenges in Test Reporting & Analysis

When it comes to automation testing, “failure” is a very broad term. You have to be certain about what is causing this failure. For effective test triaging, you have to do some classification first. Without classification, it's tough to understand where you should spend your time.

For example, if it's an application issue, you should probably file a bug immediately and follow up with the developer to let them know that automation found something that needs to be fixed. If it's a script issue, you need to handle it differently.

And if it's already a known issue, what do you do?

Back when I was working for a large firm, when a bug was introduced during a sprint and folks knew about it, we still would run our tests after every check-in.

When reviewing the results, I had to remind myself that the test failure wasn’t a real failure, but rather a known issue that the team was fixing.

I didn't have to worry about it right now, but I had to tag it in the code. It would subsequently become untagged, which was a nightmare. Having a way to classify failing tests quickly helps you focus your time on the most important things first.

If you're doing this manually, you’ll typically spend countless hours going through application and test logs, stack traces, responses from the back end, etc. But all of these can be processed by machine learning systems that classify those issues automatically, without human intervention.

That means you can focus on what matters instead of spending your time manually analyzing those results yourself. Automated test results analysis is a must-have to scale your automation testing efforts.
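To make the triage idea concrete, here is a minimal sketch of the three-way classification described above (known issue, script issue, application bug). It is a simple rule-based stand-in, not the machine learning Zebrunner uses, and the class name `FailureTriage`, the signal lists, and the test names are all hypothetical.

```java
import java.util.List;
import java.util.Set;

// Hypothetical sketch: a rule-based stand-in for an ML failure classifier.
class FailureTriage {

    enum Category { KNOWN_ISSUE, SCRIPT_ISSUE, APPLICATION_BUG, UNCLASSIFIED }

    // Exception names that often point at the test script rather than the product (assumed list).
    private static final List<String> SCRIPT_SIGNALS = List.of(
            "NoSuchElementException",
            "StaleElementReferenceException",
            "TimeoutException");

    // Signals that often point at the application itself (assumed list).
    private static final List<String> APP_SIGNALS = List.of(
            "HTTP 500",
            "AssertionError");

    static Category classify(String testName, String stackTrace, Set<String> knownIssueTests) {
        // Known issues are checked first so nobody re-triages a failure the team is already fixing.
        if (knownIssueTests.contains(testName)) {
            return Category.KNOWN_ISSUE;
        }
        for (String signal : SCRIPT_SIGNALS) {
            if (stackTrace.contains(signal)) return Category.SCRIPT_ISSUE;
        }
        for (String signal : APP_SIGNALS) {
            if (stackTrace.contains(signal)) return Category.APPLICATION_BUG;
        }
        return Category.UNCLASSIFIED;
    }

    public static void main(String[] args) {
        Set<String> known = Set.of("checkoutTest");
        // prints KNOWN_ISSUE
        System.out.println(classify("checkoutTest", "java.lang.AssertionError: total", known));
        // prints SCRIPT_ISSUE
        System.out.println(classify("loginTest", "org.openqa.selenium.NoSuchElementException: #submit", known));
        // prints APPLICATION_BUG
        System.out.println(classify("searchTest", "java.lang.AssertionError: expected 3 results", known));
    }
}
```

Hand-written rules like these break down quickly on a large suite, which is exactly the gap a trained classifier is meant to fill.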

Automation Test Run Artifacts in Zebrunner

Zebrunner collects test logs and screenshots in one place and records videos of the test run, making it possible to reproduce all the steps required to recreate the automation issue. You can also share the execution results in multiple formats, such as HTML, email, and a shareable link.

It also stores the history of the test—known issues and past run results—saving you lots of time and detecting bugs earlier as you are triaging your failures. That means you can release faster, with confidence, on time.

Test Automation Flakiness Over Time Tracker

It's also critical that your team has confidence in your tests.

If they fail, it should be due to an actual issue and not a poorly written test.

Fixing flaky tests is critical to making sure no one loses confidence in your test results.

One way to accomplish that is to track the run history of a test's previous executions over time. This will help you weed out poorly written automated tests and task your teams with fixing them as soon as possible. Another benefit of easily tracking a test's run history is finding and fixing recurring bugs.
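As a rough illustration of what tracking run history can buy you, the sketch below scores a test by how often its result flips between consecutive runs. The "flip rate" metric, the `FlakinessTracker` class, and the threshold are my own assumptions for the example, not how Zebrunner measures flakiness.

```java
import java.util.List;

// Hypothetical sketch: flag tests whose pass/fail result keeps flipping between runs.
class FlakinessTracker {

    // Fraction of consecutive run pairs whose outcome changed (pass to fail, or fail to pass).
    static double flipRate(List<Boolean> history) {
        if (history.size() < 2) {
            return 0.0; // not enough runs to judge
        }
        int flips = 0;
        for (int i = 1; i < history.size(); i++) {
            if (!history.get(i).equals(history.get(i - 1))) {
                flips++;
            }
        }
        return (double) flips / (history.size() - 1);
    }

    // The threshold is an assumed tuning knob; pick one that suits your suite.
    static boolean looksFlaky(List<Boolean> history, double threshold) {
        return flipRate(history) >= threshold;
    }

    public static void main(String[] args) {
        List<Boolean> alternating = List.of(true, false, true, false, true);
        List<Boolean> brokeOnce = List.of(true, true, true, false, false);
        System.out.println(flipRate(alternating)); // prints 1.0
        System.out.println(flipRate(brokeOnce));   // prints 0.25
        System.out.println(looksFlaky(alternating, 0.5)); // prints true
    }
}
```

A test that passed three times and then started failing flips only once, so it scores low; that pattern looks more like a real regression than a flaky script.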

Let’s say that a previously failing test was recorded in your bug tracking system and later resolved. If you see the same error again a few months later, you can easily look back at what resolved it previously.

This speeds up not only the failure analysis process but the process of fixing the issue itself. Zebrunner also allows you to see all the failing tests that have the same root cause.
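Here is a small sketch of the general idea behind grouping failures by root cause: strip the volatile parts of an error message so that similar errors share one key. The `RootCauseGrouper` class and its normalization rule are assumptions for illustration only; I don't know how Zebrunner implements this internally.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical sketch: bucket failing tests by a normalized error "fingerprint".
class RootCauseGrouper {

    // Replace numbers (ids, ports, timings) with '#' so similar errors collapse into one key.
    static String fingerprint(String errorMessage) {
        return errorMessage.replaceAll("\\d+", "#").trim().toLowerCase(Locale.ROOT);
    }

    // Input: test name -> error message. Output: fingerprint -> names of tests that hit it.
    static Map<String, List<String>> groupByCause(Map<String, String> errorByTest) {
        Map<String, List<String>> groups = new TreeMap<>();
        for (Map.Entry<String, String> entry : errorByTest.entrySet()) {
            groups.computeIfAbsent(fingerprint(entry.getValue()), key -> new ArrayList<>())
                  .add(entry.getKey());
        }
        return groups;
    }

    public static void main(String[] args) {
        Map<String, String> errors = new TreeMap<>();
        errors.put("cartTest", "Timeout after 3000 ms");
        errors.put("loginTest", "Connection refused: port 8080");
        errors.put("searchTest", "Timeout after 5000 ms");
        // prints {connection refused: port #=[loginTest], timeout after # ms=[cartTest, searchTest]}
        System.out.println(groupByCause(errors));
    }
}
```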

Test Reporting Helps Collaboration

Besides helping you with time-intensive activities like troubleshooting failing tests, a well-planned test automation reporting tool also improves collaboration. This is another area where I've seen companies fail with automation because they have trouble getting all their developers, testers, and upper management on the same page.

Fixing this goes a long way toward improving toxic cultures at organizations that ignore failing tests in their CI/CD pipelines. You can use a reporting tool like Zebrunner as a shared space where your team can review results. Everyone can see all the artifacts related to a particular test execution to get an idea of what went wrong.

Having detailed reports also speeds up discussing the issue with your team and creates a transparent, open line of communication with them.

Central Automation Test Results Hub

In the past, I had to work with multiple sprint teams working on the same project who also had dependencies on teams outside of our group. Management didn't know what was going on with automation, how reliable it was, or how different teams were doing, testing-wise, with their automation efforts.

This was due to not having a way to combine and aggregate the results provided by different teams all in one place. That's where incorporating a reporting tool like Zebrunner with its execution reports can help keep everything in one place.

Using Zebrunner, I found that one thing that makes it stand out from other solutions is that the data is live and the reporting is highly customizable.

How to Report Test Execution Effectively

Management can use dashboards to get a real-time, big-picture view of the quality of the whole development process and make informed decisions, while you can drill down to understand what is actually going on.

Management and your team can clearly see which tests are unstable and which tests are flaky. The team then has a clear idea of what needs to be fixed and how to address those issues, and can allocate time during sprint planning to refactor some of the tests.

Data is aggregated and can be visualized in many ways. You can see how your team's test pass rate changes over time. It can help you to understand what the ROI of your test automation is.

You can also see how many new cases have been automated, and how unstable the existing test cases have been. This gives you an idea of where you need to invest your engineering time to fix those problems.
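To show the kind of aggregation that sits behind a pass-rate-over-time chart, here is a minimal sketch that rolls several runs per day into one daily pass rate. The `PassRateTrend` and `Run` names and the sample numbers are made up for the example; a reporting tool would pull this data from its own run history.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch: roll individual test runs up into a pass rate per day.
class PassRateTrend {

    static final class Run {
        final String day;   // e.g. "2024-01-01"
        final int passed;
        final int total;

        Run(String day, int passed, int total) {
            this.day = day;
            this.passed = passed;
            this.total = total;
        }
    }

    // Returns day -> pass rate in percent, in the order the days first appear.
    static Map<String, Double> passRateByDay(List<Run> runs) {
        Map<String, int[]> totals = new LinkedHashMap<>();
        for (Run run : runs) {
            int[] sums = totals.computeIfAbsent(run.day, day -> new int[2]);
            sums[0] += run.passed;
            sums[1] += run.total;
        }
        Map<String, Double> rates = new LinkedHashMap<>();
        totals.forEach((day, sums) ->
                rates.put(day, sums[1] == 0 ? 0.0 : 100.0 * sums[0] / sums[1]));
        return rates;
    }

    public static void main(String[] args) {
        List<Run> runs = List.of(
                new Run("2024-01-01", 90, 100),
                new Run("2024-01-01", 45, 50),
                new Run("2024-01-02", 80, 100));
        // prints {2024-01-01=90.0, 2024-01-02=80.0}
        System.out.println(passRateByDay(runs));
    }
}
```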

Test Ownership

Zebrunner also has a concept of test case ownership. You can link a test case to the automation engineer who is responsible for maintaining it, and keep track of how many test cases that engineer covered, how many of them were failing, etc.

This gives you some return-on-investment insight into the results your engineers are producing.
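The ownership idea boils down to a per-engineer tally. The sketch below counts how many tests each owner covers and how many of those are failing; the `OwnershipReport` and `Result` types and the sample names are hypothetical and are not part of Zebrunner's API.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical sketch: tally covered and failing tests per owner.
class OwnershipReport {

    static final class Result {
        final String owner;
        final String testName;
        final boolean passed;

        Result(String owner, String testName, boolean passed) {
            this.owner = owner;
            this.testName = testName;
            this.passed = passed;
        }
    }

    // Returns owner -> {tests covered, tests failing}, sorted by owner name.
    static Map<String, int[]> summarize(List<Result> results) {
        Map<String, int[]> byOwner = new TreeMap<>();
        for (Result result : results) {
            int[] counts = byOwner.computeIfAbsent(result.owner, owner -> new int[2]);
            counts[0]++;
            if (!result.passed) {
                counts[1]++;
            }
        }
        return byOwner;
    }

    public static void main(String[] args) {
        List<Result> results = List.of(
                new Result("alice", "loginTest", true),
                new Result("alice", "cartTest", false),
                new Result("bob", "searchTest", true));
        summarize(results).forEach((owner, counts) ->
                System.out.println(owner + ": covered=" + counts[0] + ", failing=" + counts[1]));
    }
}
```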

Automation Report Projects View

Your management team will also love Zebrunner’s projects feature.

The whole concept of projects is similar to the idea of projects in Jira.

For example, if you use Zebrunner in an enterprise-scale organization where multiple teams develop and maintain multiple products simultaneously, you can easily keep each team's product context separate within a single workspace.

That's something I find most other reporting tools on the market don't have.

Integrations with Other Automation Tools

Another stumbling block I've seen with reporting automation test results is that the system storing the test run history isn't easily accessible in other systems.

Having a solution that has multiple, built-in integrations is a big help.

Zebrunner has created a bunch of integrations with the standard project management toolset, automation frameworks, and test case management tools like:

- Jira

- Slack

- GitHub

- Jenkins

- CircleCI

- JUnit

- PyTest

- Appium

- TestRail

- qTest

Just to name a few.

If your tool is not listed, they also have an API guide to create your custom integration.

While I was looking around, I also discovered that Zebrunner is more than just a reporting tool.

They have added functionality to bring many aspects of testing together in one place.

Zebrunner has a built-in, open-source test framework (Carina), a mobile device farm, a managed, scalable Selenium grid, and much more.

I may cover that in another article if there is enough demand.

Check Out AI Automated Test Reporting for Yourself

As you have seen, AI-based reporting can take your automation testing process to the next level.

A tool like Zebrunner can also satisfy both your engineers’ and managers' needs.

But don't take my word for it.

Check out Zebrunner’s free 30-day trial and try it for yourself. Let me know how it works for you.
