The times, they are a-changin’. Ask anyone who’s been in the QA/testing game for any length of time and they’ll tell you that the way testing was perceived in the last century is quite different from the way it is perceived today.

That’s why it was fun to talk to testing expert Andy Tinkham on my latest Test Talks podcast episode, Modern Testing. Andy has started a philosophy of testing that touches on what he sees as dangers with how testing is currently perceived and what we can do to change it for the better.

How Has Testing Changed?

According to Andy, testing absolutely is changing, and we haven't even seen the full extent of it yet.

Between the rise of Agile methodology and the headlines we see about large tech companies getting rid of their testers, “Oh no, am I going to have to get another job?” articles are everywhere. But companies aren't getting rid of testing; they're simply getting rid of people who had the particular job title of “testing.”

All of these pieces are driving a sizable wave of change. We've got more sophisticated users who have more things competing for their attention, we've got app stores that make it easier for users to switch in some sort of commercial application space, and we've got shorter and shorter development cycles.

Based on Andy’s consulting experience, Agile is so well established that if we think about Geoffrey Moore's “Crossing the Chasm” gap, we're down to the Laggards.

Most places have adopted something that they call Agile — which may or may not resemble what other people call Agile — but the point is that they do have something in place.

Faster Release Cycles and More Complex Software

A common feature nowadays is a faster release cycle. Unfortunately, the ways in which we used to approach testing don't scale well — or at all — in those environments. We're also building more complex systems. We've solved the easy problems in software; we're trying different things, reaching farther, and building bigger systems with more interconnections, more integrations and more functionality.

At the same time, we've got developers and other team members taking a larger ownership role in quality. While it's wonderful to see this happening, it means that some of the tasks that have traditionally been done by the QA group or the testing group are now being claimed by others.

We're finally getting something we've been asking for, but how do we adapt to the changes? How do we make developers more aware of quality, when we’re more accustomed to them throwing something over the wall with a basic “Yep, it compiles!” if we’re lucky?

We've finally got more sophisticated automation coming through, as well — which, again, is cutting into some of the tasks that have traditionally been assigned to testers.

The current rate of change indicates to me that within five or ten years the traditional role of tester in a traditional quality assurance team is at a minimum going to look very different, if it even exists at all. I believe we're at a crossroads, and we really need to respond to it as an industry.

Why Do Testers Find Themselves in Trouble?

Andy and I have seen that there are testers and test organizations who are not providing enough value to their organization.

These individuals and teams are often seen as roadblocks; they may be doing the wrong things, or they may simply not be effectively communicating the value they're providing, and/or their value is largely unseen.

As a result, more and more QA teams and testers (those roles, those positions, those departments) are going to be cut. Businesses are going to be saying, “Why am I paying for this?” They can’t see the value, and as an industry we share a good chunk of the blame for that.

After speaking with Andy, I was contacted by someone who works for a firm that creates fitness monitors and who has found himself in this exact situation. He asked me if I knew anyone who was hiring. When I asked why, he said, “There is a feeling here that having a separate automation department doesn't scale. Instead, they hire senior developers into the software test engineering department to coach, train, and mentor developers to write their own automated tests, along with the unit and component tests they are already writing.”

WTF?!? If you’re a tester you’re probably wondering, “How did we get into this situation?”

Testers Have Caused Some of These Issues

We've done some things that have helped make us that kind of roadblock: essentially someone who needs to be worked around, as opposed to a team member.

We've taken the concept of repeatability and put it up on a pedestal. We've said, “Repeatability is everything; everything must be repeatable. Everyone must be able to run this.”

By doing this, we've cut out the individual differences we bring. We're ignoring the fact that testing is a skilled and knowledgeable profession. When we say “anybody,” people hear “anybody.” Even if we mean any tester with a sufficient level of skill and knowledge, that’s not what people are hearing.

Instead, they're hearing, “Anybody can do this.” You hear the old, “I can just grab five people off the street to test this. Why do I need you?” We've done this to ourselves.

Quality is a Vague and Nebulous Term

Andy discusses how he’s seen testers who are extremely dogmatic in their process adherence. Their thinking is that since the process and test planning have been agreed on, it must be done that way, whether it makes sense or not.

It's no wonder that when faced with that kind of attitude an organization would try to work around it; they're striving to be more efficient, and the testers are acting as a roadblock to that.

We've also spent quite a bit of time operating under a shallow agreement. “Quality” is a pretty vague and nebulous term – each person sees it differently. It's very subjective, yet we all act as if we know what quality is.

Most people haven't ever been asked to articulate the answer to the question, “What is quality?” But it's just one of those situations where you know it when you see it.

Take a project manager, for instance. The type of things they're going to value as quality include being on time and under budget, whereas a salesperson values features that are easily demoed and stand up well against the competition. They're not necessarily conflicting definitions of quality, but they're not identical definitions, either.

We talk about the concept of quality as if we all mean the same thing by it, and that can sometimes hurt us.

Testing Monkeys in a Cage

As an industry, we've become like that apocryphal study in which scientists put monkeys in a cage, then placed some bananas at the top of a ladder. Whenever a monkey attempted to climb the ladder, the scientists sprayed it with a fire hose. The monkeys eventually stopped going for the bananas, so the scientists began swapping the monkeys out for new ones, one at a time. When a new monkey tried to grab the bananas, the other monkeys would gang up on it and beat it up. It eventually got to where none of the original monkeys were left, meaning none of them had ever been sprayed with the hose, yet the monkeys all continued to beat up any new monkey that went for the bananas.

There are things about which we as an industry have simply said, “This is good testing; you must do it this way,” even though we don't necessarily know what value it provides.

Testers as Lower Class Citizens

While we’re getting in the way of the process, we're also downplaying our skills. If I had a nickel for every time I’ve heard someone say, “I'm just a tester. I can't do that,” or something similar that downplays the knowledge and skill we bring to the team and the value of the actions we perform, I may not be able to retire, but I wouldn't be hurting for cash.

It's a common thing. We as an industry have internalized some of this lower-class citizenship we've been dealing with for decades. We then reinforce it with our actions by not pushing back when we should, even in clear cases in which we can add value.

We've also commoditized our skills. I see this quite often from a consulting standpoint: there are a lot of clients for whom the determining factor when bringing in a consultant is cost. Their goal is to get a body into a chair for as little money as possible. When that kind of commoditization race exists, everybody loses. We all end up making minimum wage for this skilled and knowledgeable profession. It becomes all about shaving another dollar off the rate.

We've done that kind of commoditization. We've focused ourselves on dehumanizing work in which we give someone a stack of scripts with each action spelled out in detail, then evaluate them in a way that encourages sticking to those scripts. Whether it's an official evaluation or more of an “Aren't you done yet?” type of evaluation, it judges testers by the number of scripts they can get through in a given period of time.

In a nutshell, we're taking the humanity out of the job.

We've been fed explicit instructions on what to do, and we've also implemented an evaluation system that guarantees people stick to the script. We could have robots do that!

Unfortunately, that is the extent of what many testers do. There may be some thinking done at the beginning but then, because of that enshrining of repeatability, it's basically just, “Here you go. Here's today's programming. Go execute it.”

So How Do We Fix Testing?

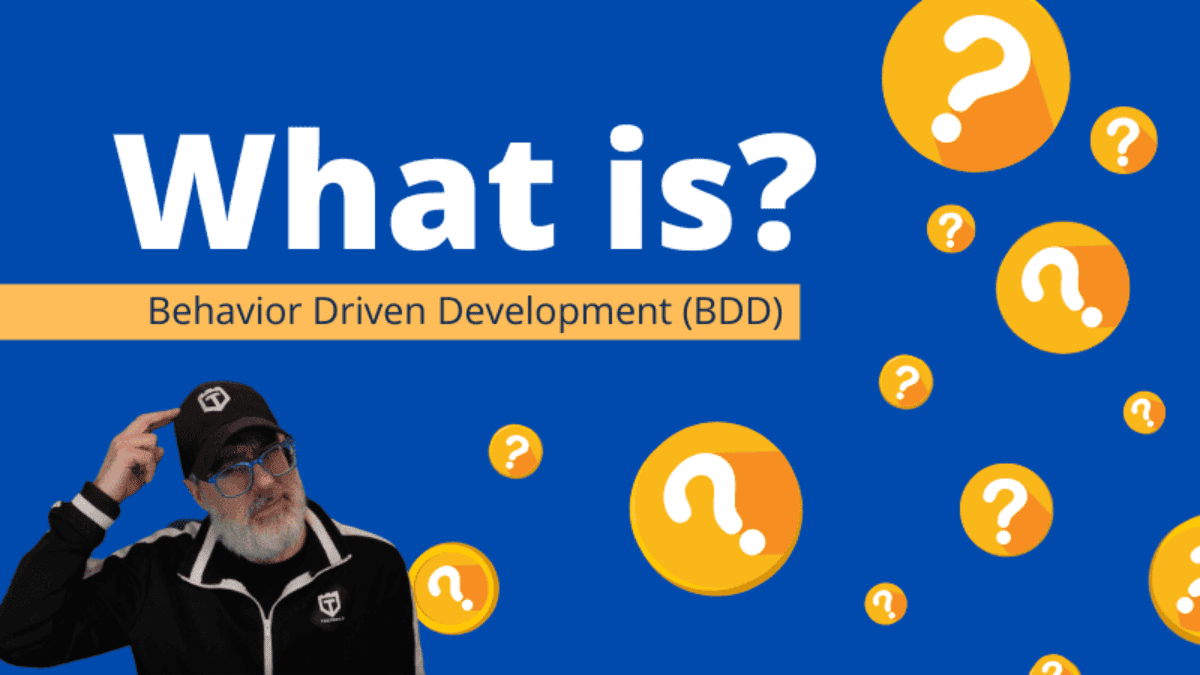

Within the philosophy of modern testing there are a series of principles Andy has broken down into four pillars: Context First, Testers are Not Robots, Using Multiple Lenses for Test Design, and Providing Useful and Timely Information. These principles can help us dig ourselves out of the situation in which we currently find ourselves.

To discover more about how the four principles work, listen here:

More Modern Test Awesomeness

| Joe: | Hey Andy, welcome back to TestTalks.

|

| Andy: | Thanks Joe I'm excited to be here.

|

| Joe: | Well it's great to have you back on the show. Last time we spoke was in episode seventy eight. I think you've changed jobs since then. What have you been up to, what's new?

|

| Andy: | I am the practice lead for a company called C2 solutions. We're a Twin Cities based consultancy. Our biggest practice is a QA and testing practice and as practice lead I am at the head of that. I get involved in everything we do. Whether that's some building work or supporting my other consultants in their delivery efforts, sales and recruiting, internally building a community, and externally growing C2's reputation.

|

| Joe: | Awesome. Today I would like to talk about your presentation called Modern Testing. First thing, what do you mean by modern testing? Has testing really changed in the past few years? What are your thoughts on that?

|

| Andy: | Absolutely testing is changing and we haven't seen the full extent of that yet. There's the rise of agile methodology, and the headlines we see about big tech companies getting rid of their testers: very “Oh no, am I going to have a job?” type articles, where companies aren't getting rid of testing, they're getting rid of people who had a particular job title of testing. All of these pieces are driving a big piece of change. We've got more sophisticated users who have more things competing for their attention. We've got app stores that make it easier for users to switch in some sort of commercial application space. We've got shorter and shorter development cycles. I would say, based on my consulting experience, Agile is so far established that if we think about Geoffrey Moore's Crossing the Chasm gap we're down to the laggards.

|

| Most places have adopted something that they call Agile which may or may not look anything like what other people call Agile. They have something in place. A common feature is this faster release cycle and the old ways that we approach testing don't scale well, or at all, in those environments. We're also building more complex systems. We've solved the easy problems in software, we're trying different things, we're reaching further, we're building bigger systems with more interconnections and more integrations and more functionality. That's another pressure on us to scale our testing. At the same time we've got developers and other team members taking a bigger ownership role in quality. Which is great, it's wonderful to see this happening, but it means that some of the tasks that have traditionally been the purview of the QA group, the testing group, are now being claimed by others. How do we adapt to that, when we're finally getting this thing we've asked for: developers being more aware of quality and not just throwing something over the wall with a basic, “Yep, it compiles!” If we even get that.

|

|

| Finally we've got more sophisticated automation coming through as well. Which, again, is cutting into some of the [inaudible 00:03:08] tasks that have traditionally been assigned to testers. I would say that the rate of change is indicating to me that within five or ten years the traditional role of tester, the traditional QA organization, is at a minimum going to look extremely different if it even exists at all. I think that we're at a crossroads and we really need to respond to it as an industry.

|

|

| Joe: | I definitely agree. Almost everyone that I speak to now, they're having the same issues. I don't know if it's a misunderstanding of what a QA tester role is or what someone with those skills does? I've been hearing for the past few years, “QA is dead, testing is dead.” I really don't see that. I see people with those skills doing more stuff but, like you said, not necessarily having the title of Manual QA Tester or something. How do you think that's going to impact us in five or ten years? I've also heard that eventually we're not going to be developing because it's going to be so sophisticated that people will just be able to generate code. I know that's not going to happen but it's this story that we hear over and over again that, “You're going to be replaced, you're going to be replaced.”

|

| Andy: | I think there were two questions there. The first piece, the QA is dead, the testing is dead piece. My take on that is that it is, to an extent, a sensationalizing of the same thing that I'm talking about. The testing activity isn't going away but there are people that I've met in the course of my career who are very mechanically focused in the way that they approach their job. There's a very, I wouldn't say mentality, but an external perception that the role is insert one requirement get one test case out over and over and over. You just turn the crank and get test cases out based on that.

|

| There are testers, there are test organizations who are not providing enough value to their organization. They may be seen as a roadblock, they may be doing the wrong things, or they may simply not be communicating the value they're providing and the value is largely unseen. More and more those are just going to be cut. Those roles, those positions, those departments. Businesses are going to be saying, “Why am I paying for this?” They can't see the value. As an industry we share a good chunk of the blame for that.

|

|

| There are things that we've done that have made us that kind of roadblock, that kind of somebody to be worked around rather than a team member. We've taken the concept of repeatability and put it up on a pedestal. We've said, “Repeatability is everything, everything must be repeatable. Everyone must be able to run this.” By doing that we've cut out the individual differences we bring. We've cut out that testing is a skilled and knowledgeable profession. When we say anybody people hear anybody even if we mean any tester with a sufficient level of skill and knowledge, that's not what people are hearing. Now they're hearing, “Well anybody can do this.” You hear the old, “Well I can just go get five people to come in off the street to test this. Why do I need you?” We've done that to ourselves.

|

|

| We've also pushed back as organizations have tried to change to become more effective. I've seen testers that are extremely dogmatic in their process adherence. Where this is the one process we agreed on and we must do it this way and it doesn't matter that it doesn't make sense right now, this is the process and we've got to do it. It's no wonder that when faced with that kind of attitude an organization would try to work around that because they're trying to get better. They're trying to be more efficient and the testers are working as a roadblock there.

|

|

| We've spent a lot of time operating under a shallow agreement. Quality is a very vague and nebulous term. It is not the same to each person, it's very subjective but we all act as if we know what quality is. One of my roles at C2 is interviewing potential candidates who might join my practice and be placed with our clients. I love to ask them what quality is because I never get the same answer. There might be some variants on conformance to requirements or suitable for purpose. The classic Juran and Deming type answers, but nobody phrases it the same way, even if they're getting at those concepts, unless they use those specific words that Juran and Deming use. Most of the people haven't ever been asked that question or they've never had to articulate what quality is. It's just one of those things where, “Well we'll know it when we see it.”

|

|

| If you think about a project manager. Things that they're going to value as quality are things like on time, under budget, nobody yells at us. Whereas what a salesperson might value are features that easily demo, features that stand up well against our competition. They're not necessarily conflicting definitions of quality but they're not necessarily the same definition of quality either. We talk about this quality thing as if we all mean the same thing by it and that sometimes hurts us.

|

|

| As an industry we've become like that apocryphal scientific study about the scientists that put some monkeys in a cage and then put some bananas at the top of a ladder. Any time a monkey tried to climb the ladder they sprayed them with the fire hose. The monkeys stopped wanting the bananas so they started swapping out the monkeys one at a time. Anytime a new monkey went for the bananas the other monkeys would gang up on them and beat them up. Eventually they got to where there were none of the original monkeys and none of them had ever been sprayed but they all continued to beat up new monkeys that went for the bananas. That's not, apparently, actually a real study. I went looking and Snopes says it's fake but the idea there illustrates what I'm talking about. There are things that we as an industry have just said, “This is good testing, you must do it this way.” Even though we don't necessarily know what value it provides. Nope, we have to do this.

|

|

| At the same time that we're doing that, getting in the way of the process, we're downplaying our skills. If I had a nickel for every time I heard someone say, “Well I'm just a tester I can't do that.” Or something else that downplayed the knowledge and skill that we bring to the team and the value of the actions we do. I might not be able to retire but I wouldn't be hurting for cash.

|

|

| It's a common thing. We as an industry have internalized some of this lower class citizenship that we've had to deal with for decades. Then we reinforce it with our actions, we reinforce it by not pushing back when we should. When there are clear cases where we can add value. We've commoditized our skills. I see this a lot from a consulting standpoint but there are a lot of clients for whom the determining factor with the person that they'll bring in as a consultant is who's cheapest. Who can get me a body in a chair for as little price as I can? When we have that kind of commoditization race everybody loses. We all end up making minimum wage for this skilled and knowledgeable profession. It's just, “I can shave another dollar off that rate.” It's like the automated systems that set prices on Amazon. Where all of a sudden you find this book where several sellers are selling for ten bucks and then there's two that are selling it for five thousand dollars. They've used the same algorithm of go one penny higher than the previous highest one. They just bounce off each other and rack the price up. Those vendors are never going to sell those books because everybody else is selling them for ten bucks.

|

|

| We've done that kind of commoditization. Again with that repeatability, we've focused ourselves on dehumanizing work, wherein we give somebody a stack of scripts with every action spelled out in detail and we work through some sort of evaluation, whether it's more of an official evaluation or more just an “aren't you done yet?” type of evaluation, that encourages them to stick to those scripts and evaluates them by the number of those scripts they can get through in a period of time. We're taking the humanity out of the job. We've both fed them explicitly what to do and put an evaluation system in place that guarantees that people stay on the script. We can have robots do that. For a lot of testers that I've seen over the years that is the extent of what they do. Maybe there's some thinking at the beginning but after that, because of that enshrining of repeatability, it's, “Here you go, here's today's programming. Go execute it.”

|

|

| Joe: | I definitely agree. I particularly see this more often I think than people, maybe not. I'm in a business where it's heavily regulated by the FDA. What happens is people say we need objective evidence and they throw that around meaning we need a script and we can't deviate from the script and we need to follow it step-by-step and then record every single expected and actual output and that's what we do. They get so fed up and they think, “Well, we'll just automate all of it because obviously it needs to be objective evidence. The only way to get that is to record it and have it in a log file and script it and be done with it.” I think it takes away the true value of what a thinking person can do to actually find real issues with your application.

|

| Andy: | Yep, absolutely.

|

| Joe: | I think you have a few areas that you talk about within your presentation to get us over this type of thinking. I think the first one is context first. What is context first?

|

| Andy: | Let me back up for one second because there's one more piece that I want to cover before we get into the pillars of modern testing. This is a philosophy. This is not a methodology, it's not “What is the new version of good testing.” This is a way of thinking about what good testing is. It is probably still going to evolve and it is entirely built on the work of a bunch of smart people who've influenced me over the years. This is my synthesis of their work, my own combining and fleshing things out.

|

| A few months ago I was out in Washington state. James Bach and his brother John did a “Reinventing Testers” workshop. I don't want to go into a lot about the workshop right now. Rob Sabourin was there too. Rob's a university professor at McGill, does a lot of stuff in the testing community, and a really great guy. Early on in that workshop we were talking about, “What is a reinvented tester?” The definition that Rob came up with matches very much with what I think a modern tester is. A modern tester is one who understands the essence of testing. It's moving beyond, “Some guru says I should do this so this is what I should do.” It's not just, “Well I know I should do regression testing.” It's really understanding the essence there. What are we trying to accomplish? What are we doing? Why is this thing something that we can even consider doing? Then knowing how to apply their skills as the context changes around them. What our team is trying to achieve and what it needs to do that is changing. Recognizing that changes constantly and really applying those skills, applying that knowledge.

|

|

| Within the philosophy there are a series of principles that I'll get to later, because I've distilled them down into four pillars. The four pillars are: Context First, Testers are not Robots, Uses Multiple Lenses for Test Design, and Providing Useful and Timely Information.

|

|

| You asked about context first. Context, much like the context-driven testing school that we talked about last time. Much as they emphasize all of these pieces that define the project, the people involved in it, the user population that we're developing for, the processes that are in place, missions of the test group. Are we trying to check an app prior to submitting it to an app store? So we're just working through stated guidelines to make sure that there's no obvious failures that they're going to bounce us right away. Or are we working in that regulated environment where if we miss something people could die?

|

|

| The mission of the test group is very much going to inform what we do. The business objectives of the organization are also going to. Are we trying to get a product out first? Are we trying to present ourselves as the most reliable option? These are going to impact what good testing is. We're also going to be affected by the specifications that we have available. Requirements, user stories, back of the napkin scrawlings, random hallway conversations that are the only time a feature is ever discussed. All of these sorts of specs change what we can do and affect what good testing is on the project. The resources we have available. Do we have a test lab? Is it shared? Does that matter? That again is going to affect us as well as all of the deadlines that are in place. If we have a deadline of a week from now, we can't do as much testing, probably, as if we have a deadline two months from now. All of these things combine together to make up context.

|

|

| Traditionally what I've seen is a tester has an understanding in their head of what good testing is. They come into a context, or the context on their team changes, and they're trying to jam their idea of good testing into that context. Sometimes it works well and other times it doesn't but the definition of good testing came first in that. In modern testing, which is what I'm calling this philosophy that I'm promoting, the understanding of the context comes first and then that defines what good testing is in that context at that moment in time.

|

|

| Joe: | Awesome, once again I definitely agree with this and I think that this is one of the problems I've been facing as we've been moving from waterfall to agile. The context has now changed, yet a lot of people are trying to do the waterfall processes that don't fit in now with the context that we're working in. There's a constant conflict now. Instead of saying, “Look, I know what good testing is let me apply it now to this new context and help drive that process rather than becoming a roadblock and trying to jam something down that doesn't fit.” Is that your main point?

|

| Andy: | That's the first pillar, yeah. There are other pieces around that too. Get the understanding, use that to define what good testing is but it's also avoiding best practice thinking. A piece that comes directly from the context driven stuff. We may have talked about this actually the last time that I was on. The term best practice is a limiting term. It shuts down thinking whether a practice applies in a given context. It already has the term best in it. What is better than best? There is nothing. Best is the superlative. It's got that hidden view built right in that, “Well if it's a best practice it must be something that we should be doing.” That's not necessarily true. There are certainly good practices in context. I would even go so far as to say for a particular team in a particular time in a particular context there is a best practice potentially for them. It is not an industry wide best practice. It is not necessarily even a constant practice. It is the thing that the team gets the most value out of doing at that moment in time.

|

| Maintaining that objectivity, that ability to look at it and say, “That's an interesting idea and I see that it worked over here and over there but that's not like my situation. That practice might be good for me, it might not.” Recognizing that distinction and not just saying, “Yep, must be good going to do it.”

|

|

| There's also the idea, we talked about the shallow agreement. The, “We mean different things.” That's part of the context as well. Making sure that we're all working towards the same missions and objectives. The same definition of what a quality product is. That really is that whole first pillar.

|

|

| Joe: | The second pillar then is testers are not robots. You touched a little bit on this already. What's your definition of we're not robots?

|

| Andy: | This is a broad answer here. There are multiple pieces. The first piece is recognizing that an individual tester brings skills. They bring knowledge, they bring experience. The way that you test a feature and the way that I test a feature might not be the same. That can be really good because I might find bugs that you'll miss and vice versa. It also recognizes that if we're doing all that test design up front and then keeping the scripts and passing them off to a robot. Whether it's a human or a computer. When we design those scripts we are at the point where we know the least about the functionality that we're testing that we're going to know in the entire test cycle. We're going to learn more as we work through it, as we see how it actually works, as we come up with more experiments to try. Recognizing that because we're human we're doing this and building pieces into the process, whether it's through a session based exploratory testing model or using automation in combination with manual testers gives us a much better ability to capitalize on those skills that testers bring.

|

| Another piece that has come out of this, and I'm a little hesitant to say this on a show that mainly focuses on automation, I think we've enshrined automation too far. That's where we get the automate everything type of discussions where teams of people are often figuring out how to change how they work to make it easier for automation. Whether that's our automation engineers don't know the system well enough so they have to have the precise steps. Or we're going to have to tell the computer the precise steps so let's do them now. Or let's completely change how we store this data so we can easily suck it in through programming code. There's a place for that, there absolutely is a place for that. Having started as an automator myself having that enshrining of automation has benefited my career, absolutely. I think that when that comes at the cost of downplaying that human element it costs us something. I'm no longer convinced that having automation always be the ultimate thing, the best thing, we're trying to get to all automation is the right way to go. Absolutely we need automation but we need to use it smartly. We need to use it in combination with human efforts in order to support what is ultimately a human activity.

|

|

| Joe: | Yeah I'm all about automation and I don't think that most people, most companies can succeed without it and that's not what you're saying. You're not saying no automation whatsoever. It's the context you're in and what makes sense for your team. I definitely agree with that. I like the point that you brought up that two testers won't necessarily find the same bug. They may go about testing differently so therefore they'll uncover different bugs than one another. I think what happens is when you do follow a set script, or just have an automated script that does everything and you rely on that, you get into inattentional blindness where you're so focused on a certain piece of functionality that you overlook other bugs that may be happening around you because you're so zoned in on this one little area. I definitely agree that is a danger, especially if you're doing medical applications or things like that. Even if you had everything automated, and you could automate everything, you'd be in trouble because you're not looking at other scenarios that you're not focusing on.

|

| Andy: | Computers are really bad at noticing the unexpected because we didn't know to tell them to look for it. It's unexpected. People are really good at that much of the time. While it is very contextual where the line is, there is, for most projects, a combination of manual testing and automation that makes the most sense to achieve the goal. I have seen teams that are so focused on the stuff that could be automated that they never do the other things. That again ties back to that “testing is dead” mentality, where it quickly becomes just a question of, “I could invest how much now to create automation for that, or keep paying six people's salaries for how long to keep running these by hand?” That becomes a financial trade-off and not one of value at that point.

|

| Joe: | I think we also touched on this next point last time but what is the multiple lens view that you talk about?

|

| Andy: | For me a lens is a metaphor for a mental model. We have a number of models we can create for the system we're working on. Requirements or user stories or whatever our specifications are give us one perspective on that system. They give us one mental model. If we really think about how analysts are doing their work, how developers are doing their work, they're thinking about how the system should function. They may break off and think of error conditions or what should happen when something goes wrong. For the most part the specifications are, “This is what it should look like when it works.” That gives us a mental model that's biased towards this is the way that the system should work. That's an important model. We absolutely need to have that model. We need to test against it, we need to make sure that the system works as it's intended to work but the bias that gives us in the test cases gives us an incomplete view of the landscape of possible tests.

|

| Just like if we were looking through a telescope versus a handheld magnifying glass, some things would be emphasized between the two lenses and some things would be obscured between the two lenses. Different lenses, different mental models, give us different sets of tests. Another lens we might use would be one of risk. Not risk at a … There's a chance at … Living in Minnesota, fishing season is a big thing here. It's not the risk that all six team members are going to get sick magically on the first day of fishing season, or that level of project risk. This is a deep functional risk. For this feature that I'm testing, how can I imagine that it might go astray, that it might go wrong, that it might do the wrong thing? Then how would I know if that was the case? How can I turn that into a test case? That's going to give us a different set of tests than one where we work with requirements. The risk-focused set is also going to be biased. It's going to be biased much more toward the edge cases, the “what happens when we get off into the weeds” type of tests.

|

|

| We can look at the system from another perspective. We can look at it from a security perspective for example. We could say, “Where is there data that a hacker might be interested in obtaining? Where are there weak points in the communication between our components that somebody could tap into and observe what's going on?”

|

|

| We could look at it from a performance lens. Where are the potential bottlenecks? What could I do that might lead to the system choking? One project that I worked on was for syringe pumps for hospitals. The sort of pump where you put a syringe in, hook it up to an IV, and over time the pump slowly pushes the plunger down on the syringe to give a very slow and constant application of whatever is in the syringe. These syringe pumps were connected to WIFI. They'd sync back every ten seconds, or something like that, with how much should be left in the syringe and standard operating data. One of the things we found when we were looking at it from a performance standpoint was the question of what would happen to the back end systems if the hospital WIFI went down. Networks being what they are, sometimes they go down. What if we were down for a week? What if all of these pumps, which had been accumulating updates to send every ten seconds for a week, decided when that WIFI came back up that they were all going to send that data to the back end? It would be really bad to have that crash the back end, and we also wanted to know how long it would take for the back end to catch up.
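
To put rough numbers on that backlog scenario, here is a minimal sketch. The ten-second sync interval and the one-week outage come from the conversation; the number of pumps and the back end's processing rate are made-up assumptions for illustration only, and the function names are hypothetical.

```python
# Rough backlog estimate for the WIFI outage scenario described above.
# From the conversation: each pump syncs about every 10 seconds, outage of one week.
# Assumed for illustration: pump count and back end throughput.

SYNC_INTERVAL_S = 10
OUTAGE_S = 7 * 24 * 60 * 60  # one week in seconds


def backlog_per_pump(outage_s=OUTAGE_S, interval_s=SYNC_INTERVAL_S):
    """Number of updates one pump accumulates while the network is down."""
    return outage_s // interval_s


def catch_up_seconds(pumps, backend_rate_per_s,
                     outage_s=OUTAGE_S, interval_s=SYNC_INTERVAL_S):
    """Seconds needed to drain the backlog while pumps keep sending new updates.

    Returns None if the back end has no spare capacity and never catches up.
    """
    backlog = pumps * backlog_per_pump(outage_s, interval_s)
    incoming_per_s = pumps / interval_s          # steady-state load after recovery
    spare_per_s = backend_rate_per_s - incoming_per_s
    if spare_per_s <= 0:
        return None
    return backlog / spare_per_s


if __name__ == "__main__":
    print(backlog_per_pump())                    # updates queued per pump
    hours = catch_up_seconds(pumps=500, backend_rate_per_s=1000) / 3600
    print(f"{hours:.1f} hours to catch up")      # with the assumed numbers
```

Even this back-of-the-envelope model surfaces the two questions from the story: whether the back end survives the burst at all, and how long it takes to catch up once it does.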

|

|

| That's a test that we wouldn't have come up with if we had just used the requirements lens. To think of it, we had to look at the performance, at how the data flowed through the system, and at where we could get a bottleneck. That means moving beyond just using requirements, which is a place where I see many testers stop. They're very much in that mechanical mode: “I put a requirement in, I get a test case out. I'll work through all that, and when I've tested all of the requirements, I'm done.” I would say that a huge number of candidates that I talk to describe that as testing. Modern testing needs to move beyond that. We need to move beyond those tests. If we're focusing on the same aspects that the whole team has thought about all along anyhow, they are the least likely tests to find bugs. Which doesn't mean we shouldn't run them; they're important tests. But we need to think about the other areas where bugs could be and bring that in. That's a place where we can add huge, huge value to the team.

|

|

| Joe: | Awesome, I love this pillar. For some reason every time you talk about it I think about Einstein and a thought experiment. That's what it almost sounds like. You're thinking of things outside the box. You're coming at it from a different angle and that's where a lot of times you find really interesting cases like you brought up.

|

| Andy: | I can't imagine doing tests without this now. It is so much the chance to be creative, to think through all sorts of different aspects. There are several places where you can find various lists of quality attributes. Wikipedia has a list, [inaudible 00:29:41] and some colleagues somewhere in Europe. I should know where they are. I want to say Sweden, but I'm not positive there. They've got a two-page PDF of all sorts of attributes that could be lenses. Even Elisabeth Hendrickson's Test Heuristics Cheat Sheet, particularly the heuristics piece. There are things like Goldilocks and CRUD. All of these things give you different models of the system. That's the fun part.

|

| Joe: | Very cool. The last pillar is usable and timely info.

|

| Andy: | That comes back to the idea that testing, at its heart, is an information-providing activity. Most testers I know aren't going into the code base and fixing the bugs they find. They're not designing the application up front, although they might be in some of those meetings. They might certainly be reviewing the results of those meetings as well. At its heart, as a test team, we are providing information back to our stakeholders, back to our team, to inform them what we know about the quality of the system, so that they can make decisions like, “Is it time to ship? Are we at a good enough level where we can start talking about things?” Or whatever information they need. That's going to be very contextual as well, drawing us back to the context-first pillar.

|

| What we've traditionally done in our reporting is we've given our team, we've given our stakeholders, raw data. We give them counts. We say, “For this feature I have seventy-two test cases. I ran sixty-five of them and sixty passed.” There are so many interpretations of that data. First, why do we have seventy-two test cases? Maybe we should have had seventy-five and we just didn't have time to write three of them. Maybe we should have had two hundred and we haven't had time to write a lot of them! Maybe we only needed sixty in the first place, and so we have extra test cases. Once we get past that, why did we only run sixty-five of them? What about the other seven? Were they not important? Were they the most important tests, so that all we know is that cosmetically the feature looks good, because that's what the sixty-five tests we ran cover, while the seven tests that exercise the functionality, the reason the feature exists, haven't been run yet? Are the seven tests just broken and we don't care about them? They don't matter? There's so much there. Only sixty passed. Well, were they the sixty least important or the sixty most important?

|

|

| We could be in really good shape with those numbers or we could be in really bad shape. We don't provide the interpretation of the data. We just say, “Here is the raw data. I'll just put it on the table.” The worst cases I've seen are, “Here's every metric I can think of, calculate, or grab out of my test case management system. Here's a bunch of numbers.” That does a huge disservice to our team. It decreases the value we provide, because the value is inherently in that information. It means that if we're making our recipients do all of that interpretation work, we again undermine ourselves with that, “Well, anybody can do this. How hard is it to go look in the test case system and say there are seventy-two records in this list?”
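
As a small illustration of why those counts are ambiguous, the sketch below builds two invented test runs that both report seventy-two test cases, sixty-five run, and sixty passed, yet tell opposite stories once a priority is attached to each test. The priority labels, the status names, and both distributions are assumptions made up for this example; only the three headline numbers come from the conversation.

```python
from collections import Counter


def raw_counts(tests):
    """Return (total, run, passed), the kind of raw numbers often reported."""
    total = len(tests)
    run = sum(1 for _, status in tests if status != "not_run")
    passed = sum(1 for _, status in tests if status == "passed")
    return total, run, passed


def summarize(tests):
    """Group statuses by priority so the reader can see where the gaps are."""
    by_priority = {}
    for priority, status in tests:
        by_priority.setdefault(priority, Counter())[status] += 1
    return by_priority


# Scenario A (invented): everything unrun or failing is low priority.
scenario_a = ([("high", "passed")] * 20 + [("low", "passed")] * 40
              + [("low", "failed")] * 5 + [("low", "not_run")] * 7)

# Scenario B (invented): the unrun and failing tests are the high-priority ones.
scenario_b = ([("high", "not_run")] * 7 + [("high", "failed")] * 5
              + [("high", "passed")] * 8 + [("low", "passed")] * 52)

if __name__ == "__main__":
    for name, tests in (("A", scenario_a), ("B", scenario_b)):
        print(name, raw_counts(tests), dict(summarize(tests)))
```

Both scenarios print the same raw tuple, so the counts alone cannot tell a stakeholder which situation they are in; the per-priority breakdown is one possible piece of added context, not the only one.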

|

|

| In modern testing part of understanding the context first is understanding what the information needs of the team and stakeholders are. It's not generally going to be, “How many test cases do we have?” Although they might frame it that way because many managers have been trained over their careers that that's how you get information about quality. When it comes down to it most managers probably don't care about the number of test cases. They just use that as a surrogate measure for more test cases equals more testing. What they really are concerned about is things like, “If we release now how many phone calls are my support center techs going to get? How bad are the headlines going to be if we have a problem? How much is this going to impact my bonus?” Other pieces of quality than just the metrics.

|

|

| What we need to do first of all is provide more context around our data. Michael Bolton talks about having three stories that we provide when we're providing information. First, we're talking about the quality of the application itself. What have we seen work? What have we seen not work? What can we extrapolate from what we've done to other situations where it might or might not work? That's not enough in and of itself. If I'm your tester and you're a stakeholder and I say, “The product is good,” and that's all I tell you, then you have nothing to evaluate that on other than the degree to which you trust me.

|

|

| The second story that comes in is what I did to get that story of the quality. If I say that the product is good because I logged in and I got to my homepage, that's not a very thorough definition of good in most situations. That's probably pretty weak testing in nearly every context. Unless you're making a login dialogue widget or something, maybe. Suppose instead I tell you that I looked through a requirements lens, I looked through a risk lens, I looked through a security lens, I looked through a performance lens, I picked the highest value test cases from all of those lenses, I thoroughly executed all of the top priority test cases, and everything came out the way I expected it to. That's a deeper level. Now you've got more ability to evaluate my first statement that the product is good, because you have that information about what I did. That's still not enough, because most of the people that we're communicating to aren't testers. They don't have a testing background. They don't have the ability to say, “Was that the right amount of testing in this case?”

|

|

| That's where the third story comes in: the story of the quality of the tests. That's where we start talking about things like, “How much have I covered? Why did I do what I did? Why is this enough testing or not enough testing? What's needed to get more information? What got in my way as I was trying to get through things? Are there places that I couldn't get to evaluate?”

|

|

| All together these three things, you've got the evaluation of the product, you've got the ability to evaluate how much trust to put in that based on what I've done, and you've got the ability to evaluate what I've done based on the reasoning and what else would it take to get more information. It gives a much clearer picture. That's one piece of the usable and timely information.

|

|

| The other piece of the usable side is using tools from data science. Things like graphs. Ways of presenting the data that aren't just, “Here's a raw number or even a set of raw numbers and go interpret them as you wish.” There are ways to better convey the message that we're trying to convey with those numbers.

|

|

| When people ask me … I just had a LinkedIn message this morning … Someone asked me about my thoughts on, “Do testers have to code?” There are career paths for testers where absolutely testers have to code. If you're going into automation, that's development. If you're on an automation path, you would be very well served knowing how to code. There are career paths where it could be helpful, but there might be a chance that you end up thinking too similarly to the developers and you all miss a bug. It certainly gives you an awareness of how programs work, of how computers function, and that can be very helpful in designing test cases. Then there are also paths where I don't think you need to know how to code at all. Data science is one piece of that. I think that as we focus on this information-providing piece of modern testing, there's more and more room for people who know how to present data and know how to analyze it for patterns. We need to mine the deep insights in the data that we're not, as a team, hitting right now. I think that's another path that's open to testers, and it doesn't require coding at all.

|

|

| That's the usable side. The last bit is timely. It doesn't do anybody any good if you sit on the information for three weeks to try to get to the end of the test cycle. There are pieces of information that the team needs right now. There are pieces of information that giving the team right now would be too soon. They wouldn't be able to use it. The information would go stale before it's needed. Recognizing the combination. Recognizing what are the actual information needs my team and stakeholders have now and how can I address those and adapting as those needs change is the final piece of that puzzle.

|

|

| Joe: | Okay Andy, before we go, what's the best way for someone to find or contact you?

|

| Andy: | You can either do it via email, email gets my attention pretty quickly most days. andy@C2its.com. Cornerstone 2 Information Technology Systems is what the acronym stands for. Twitter where I'm Andy Tinkham. T-I-N-K-H-A-M is my last name. On various podcasts around the world and maybe someday I might be on again.

|