A few weeks ago on one of my TestTalks podcasts, I interviewed Janet Gregory, co-author of some of the most popular books on Agile Testing:

- Agile Testing: A Practical Guide for Testers and Agile Teams

- More Agile Testing: Learning Journeys for the Whole Team

Janet shared some of her tips and tricks on how teams can succeed with all their Agile testing efforts.

The three points from the interview that I want to focus on in this post are:

- The Agile Mindset

- The Agile Testing Quadrants

- The Dangers of Putting Off Automation

Agile Testing is a Mindset

First, getting everyone involved in testing takes a certain mindset about how you approach testing. On an Agile team, everyone is in it together: programmers test, and testers should do whatever it takes to help their team create the best possible application.

So how do we make Agile testing part of our company's culture? Make sure that the whole team responsible for delivering a feature understands how much testing is actually involved.

“Testing” not only refers to testing the software after it has been delivered to the tester. It's also about testing ideas, testing assumptions, and getting prompt feedback all the way through.

To get your whole team thinking about testing, you have to raise awareness. You have to make testing visible. You have to be able to talk about what testing actually means.

Having these conversations early on in the development process is one of the most powerful things your team can do to create an Agile testing mindset.

One way to aid in creating conversations and building an Agile testing mindset is to use the Agile Testing Quadrants.

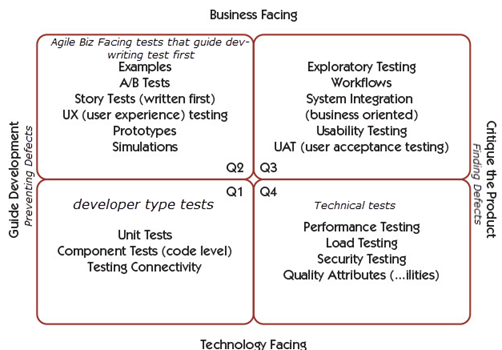

Agile Testing Quadrants

In the book More Agile Testing, the authors explain that “the agile testing quadrants (the Quadrants) are based on a matrix Brian Marick developed in 2003 to describe types of testing used in Extreme Programming.”

Basically, the authors take this quadrant model and make some small tweaks to reflect how it works within Agile.

The quadrant model is separated into four main areas:

- Q1 – Technical tests that guide development

- Q2 – Business tests that guide development

- Q3 – Business tests that evaluate the product

- Q4 – Technical tests that evaluate the product

These quadrants are a nice way to show Agile teams the types of testing that need to be considered during development. They make testing more visible and help start conversations about how much test coverage and effort you are actually putting into testing a feature.

One of the things that people struggle with (and one of the reasons why More Agile Testing was written) is how to include all these types of testing into their definitions of done and still have time to complete everything.

Janet recommends that we start thinking of things in layers:

- Story Level

- Feature Level

- System Level

What can we test at the “story” level to get that story to done? Moving up a level, we have the feature, which is made up of many stories. The “feature” level is what a business user really cares about. At the top is the “system” level. So some of the tests in the quadrants belong at the story level, while others belong at the feature level rather than in every story.

Teams are responsible for having the conversations that get them thinking about how, where, and at what level each of these quadrant-level concerns will be addressed.
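One lightweight way to make these conversations concrete is to tag each planned test idea with its quadrant and with the level at which the team agreed to cover it. The Python below is a hypothetical sketch, not something taken from the book: `Quadrant`, `Level`, `PlannedTest`, `plan_by_level`, and `uncovered_quadrants` are names invented here, and the example test ideas are placeholders.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class Quadrant(Enum):
    # Descriptions follow the four quadrants listed in this post.
    Q1 = "Technical tests that guide development"
    Q2 = "Business tests that guide development"
    Q3 = "Business tests that evaluate the product"
    Q4 = "Technical tests that evaluate the product"


class Level(Enum):
    STORY = "story"
    FEATURE = "feature"
    SYSTEM = "system"


@dataclass(frozen=True)
class PlannedTest:
    """A hypothetical record of one test idea the team has agreed on."""
    description: str
    quadrant: Quadrant
    level: Level


def plan_by_level(ideas):
    """Group planned tests by the level (story, feature, system) where they will run."""
    plan = defaultdict(list)
    for idea in ideas:
        plan[idea.level].append(idea)
    return dict(plan)


def uncovered_quadrants(ideas):
    """Return, in Q1..Q4 order, the quadrants with no planned tests yet."""
    covered = {idea.quadrant for idea in ideas}
    return [q for q in Quadrant if q not in covered]


ideas = [
    PlannedTest("Unit tests for the discount calculation", Quadrant.Q1, Level.STORY),
    PlannedTest("Examples agreed with the product owner", Quadrant.Q2, Level.STORY),
    PlannedTest("Exploratory session on the checkout flow", Quadrant.Q3, Level.FEATURE),
]
print([q.name for q in uncovered_quadrants(ideas)])  # ['Q4']
```

An empty quadrant isn't automatically a problem. The point is to make the gap visible so the team can decide deliberately whether this feature needs, say, performance or security testing, and at which level.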

Also, the sooner teams are aware of the types of testing that may need to be performed, the sooner developers can start thinking about the hooks they will need to add to make their code testable and automatable.
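As an example of such a hook, here is a minimal Python sketch, assuming a hypothetical checkout rule that depends on the time of day. Instead of reading the system clock directly, the class accepts the clock as a constructor argument, so a test can pass in a fixed time. `CheckoutService`, `discount_percent`, and the 17:00 to 19:00 discount rule are all invented for illustration.

```python
from datetime import datetime
from typing import Callable


class CheckoutService:
    """Hypothetical service; the injectable clock is the testability hook."""

    def __init__(self, clock: Callable[[], datetime] = datetime.now):
        # Production code uses the real clock by default;
        # tests can pass in a function that returns a fixed time.
        self._clock = clock

    def discount_percent(self) -> int:
        # Hypothetical rule: 10% off from 17:00 up to (not including) 19:00.
        hour = self._clock().hour
        return 10 if 17 <= hour < 19 else 0


# A test controls "now" without waiting for the right time of day.
during_happy_hour = CheckoutService(clock=lambda: datetime(2024, 1, 1, 17, 30))
outside_happy_hour = CheckoutService(clock=lambda: datetime(2024, 1, 1, 9, 0))
assert during_happy_hour.discount_percent() == 10
assert outside_happy_hour.discount_percent() == 0
```

The same idea applies to other hard-to-control dependencies such as random numbers, network calls, or a database: if developers know early which ones testers will need to control, they can build the seam in from the start instead of retrofitting it.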

For an in-depth discussion on these quadrants, check out Chapter Seven of More Agile Testing.

The Dangers of Putting Off Automation

I started my conversation with Janet about test automation by asking her how you know what to automate and when it makes sense not to automate something. She came back to a term that recurred throughout our talk: “technical awareness.” You need to be technically aware enough to articulate the benefits and costs of test automation.

If we as testers can't articulate these things, it's hard to go to our managers and explain why something can or cannot be automated. But that doesn't mean we should ignore automation altogether.

For some reason, some testers still resist test automation. While some of their concerns may be valid, Janet believes that an Agile team isn't sustainable over time without some type of automation. It might be just a base of really good unit tests, but you have to have something, because you want that timely feedback.

In Agile development, everything changes so fast that unless we have the safety net of a regression test suite running every day, we really don't know what we broke. That's why it is so dangerous to put off automation.
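For a team starting from zero, the “base of really good unit tests” Janet mentions can begin as small as the sketch below, written with Python's built-in `unittest` module. The `shipping_cost` function and its free-shipping threshold are hypothetical stand-ins for real business logic. Saved in a file whose name starts with `test` (for example `test_shipping.py`, a name assumed here), it can be run with `python -m unittest`; scheduling that command in your CI server on every commit or nightly is what turns a handful of tests into the daily regression safety net described above.

```python
import unittest


def shipping_cost(order_total: float) -> float:
    """Hypothetical rule: orders of 50.00 or more ship free; others pay a flat 4.99."""
    return 0.0 if order_total >= 50.0 else 4.99


class ShippingCostTests(unittest.TestCase):
    def test_small_order_pays_flat_rate(self):
        self.assertEqual(shipping_cost(20.0), 4.99)

    def test_threshold_order_ships_free(self):
        # Boundary values are where a quick change is most likely to break silently.
        self.assertEqual(shipping_cost(50.0), 0.0)

    def test_large_order_ships_free(self):
        self.assertEqual(shipping_cost(120.0), 0.0)
```

If someone later changes `>=` to `>` by accident, the boundary test fails on the next run instead of the bug surfacing in production.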

The reality is that you cannot ignore test automation without it coming back to haunt you later on.

More Agile Testing actually has a really vivid example of this in Matt Barcomb's Legend of the Test Automation Volcano. I don't want to spoil it for those of you who haven't read it yet, but the crux of it is that eventually everyone pays for putting off test automation!

More Agile Testing Awesomeness

To hear my full conversation with Janet Gregory, check out TestTalks Episode 34. And be sure to add her two must-read books to your testing library:

- Agile Testing: A Practical Guide for Testers and Agile Teams

- More Agile Testing: Learning Journeys for the Whole Team

Very cool post, Joe. I'm very excited to listen to this podcast and hear all the opinions.

Thanks. You write:

In Agile development, everything changes so fast that unless we have the safety net of a regression test suite running every day, we really don’t know what we broke. That’s why it is so dangerous to put off automation.

This makes sense, but what happens on a newer product when the software changes so much that I have to spend a day or more (because I’m not an expert) rewriting the regression tests, and then I fall behind on all my other manual testing, bug verification, etc.? I think there should be one person dedicated to automation who can make those changes.

True, it’s tricky. Sometimes, if an application is changing so much that your automation code isn’t reliable, you might have to hold off and stay a sprint behind. Also, whenever possible, try to automate at the middle/API layer. APIs tend to be more stable and don’t change as often as a UI does.
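To illustrate what testing at the API layer can look like, here is a hedged Python sketch using only the standard library. It checks the JSON that a hypothetical `/api/orders/42` endpoint returns instead of clicking through a UI, so a redesigned page doesn’t break it. Because there is no real application here, a tiny `http.server` stub stands in for the API; in practice you would point `get_json` at your own test environment. The endpoint path, field names, and helper names are all assumptions.

```python
import json
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class FakeOrderApi(BaseHTTPRequestHandler):
    """Stub standing in for the real application's API (hypothetical endpoint)."""

    def do_GET(self):
        if self.path == "/api/orders/42":
            status, payload = 200, {"id": 42, "status": "shipped"}
        else:
            status, payload = 404, {"error": "not found"}
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging


def start_stub_server():
    """Start the stub on a free local port (port 0); returns (server, base_url)."""
    server = HTTPServer(("127.0.0.1", 0), FakeOrderApi)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server, f"http://127.0.0.1:{server.server_address[1]}"


# Empty ProxyHandler: bypass any HTTP proxy configured in the environment.
_opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def get_json(base_url, path):
    """Call the API directly (no UI) and return (HTTP status, parsed JSON body)."""
    try:
        with _opener.open(base_url + path) as resp:
            return resp.status, json.loads(resp.read())
    except urllib.error.HTTPError as err:
        return err.code, json.loads(err.read())


server, base_url = start_stub_server()
try:
    status, order = get_json(base_url, "/api/orders/42")
    # Assert on status codes and data, not on page layout.
    assert status == 200
    assert order["status"] == "shipped"
finally:
    server.shutdown()
    server.server_close()
```

Because these checks depend only on the response contract, a UI redesign leaves them untouched; they need updating only when the API itself changes.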