Does your company practice some wicked test automation anti-patterns that make your skin crawl? Does it feel like you’re surrounded by mindless, automation-ignorant zombies?

If so, get ready to grab your silver bullets, crucifix and holy water as we take a look at the horror of four major automation anti-patterns. You’ll discover how to drive a wooden stake into the heart of these evil practices and rid your tests of them forever.

1. Record and Playback

This is an old-school anti-pattern that was more common when everyone was just using vendor-based testing tools. Back in the day, I started off with a tool called WinRunner. One of the features that testing tool vendors always talked about was Record and Playback. It was advertised as if you could just record your test script and play it back. Simple and easy.

Shortly after getting the tool in house and starting to record, however, you realized that, yeah, Record and Playback really wasn't going to work.

This particular approach hard codes every single action and value you enter into a script. Often when you're recording a test flow, different things come up that may not be handled in the original script, so you have to go in and modify it.
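To make the hard-coding problem concrete, here is a minimal sketch in Java. It is not output from any real recorder: the login flow, the field names, and the `type`/`click` helpers are all hypothetical stand-ins that just log what they are asked to do. It only illustrates the difference between a script with every value frozen in and a hand-written method that takes its data as parameters.

```java
import java.util.ArrayList;
import java.util.List;

class RecordedVsParameterized {
    // Hypothetical stand-in for a UI driver: it only records the actions it is asked to perform.
    static final List<String> actions = new ArrayList<>();

    static void type(String field, String value) { actions.add("type " + field + "=" + value); }
    static void click(String button) { actions.add("click " + button); }

    // Roughly what a recorder tends to produce: every action and value frozen into the script.
    static void recordedLogin() {
        type("username", "jsmith");
        type("password", "secret123");
        click("Login");
    }

    // Hand-written equivalent: the same flow, but the data comes from the caller.
    static void login(String username, String password) {
        type("username", username);
        type("password", password);
        click("Login");
    }

    public static void main(String[] args) {
        recordedLogin();            // can only ever log in as jsmith
        login("alice", "pa55word"); // reusable for any account
        actions.forEach(System.out::println);
    }
}
```

The recorded version has to be re-recorded or hand-edited whenever the data or the flow changes, while the parameterized version can be reused across tests.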

I've never had a positive experience with just recording and doing a playback of a script. There's always some sort of maintenance needed, and they tend to be pretty unreliable over time.

I can understand it if someone wants to use a Record and Playback tool just to learn an application and see how the objects are identified, but after that I really would recommend that people don't use this feature.

Selenium’s IDE allows you to record and can help you identify some objects in your application. You can then take that recording and export it to a scripting language, like Java or C#, and you can also modify it.

Truthfully, I've found over time that it's actually a lot easier to just learn how to program the API directly and not have to even deal with Record and Playback. It adds too much overhead, and in the end adds more time than it actually saves you.

That's the first anti-pattern you should be frightened of. You should keep it in its grave, and don't go digging there. Just leave it alone.

2. Not planning your test framework approach before you begin coding

This may seem obvious, but sometimes when you're in the middle of a project and you have a deadline to meet, you start coding right away just to get something out the door, no matter how bad it is or how hard it is to maintain.

But you really would benefit from doing some high-level test planning before you do any type of automation; it will definitely save you time in the long run.

The planning stage might be as simple as deciding how you’re going to set up your project structure within your IDE. Where are you going to place your files? How are you going to break up your files? What is your directory structure going to look like? What tools will you use for automation? Does your project test both a web browser and REST services? What tools/libraries will you use for that?

It's really, really important that if you're working in a larger group with multiple sprint teams and you're responsible for test automation, you first get this high-level vision in place so that as you roll it out to the other teams, everyone's on board and doing the same thing.

This is going to save you a lot of time and headaches, because if people aren't aware of what the vision is or what the standards are, they're going to start doing things however they wish. That will make the tests they write hard to follow, because they will differ from the automation written by the other teams.

You definitely want to have a standard so that the test looks the same no matter what you're testing, and no matter which team is using the framework.

Having a plan acts like garlic to ward off flaky, blood-sucking test scripts.

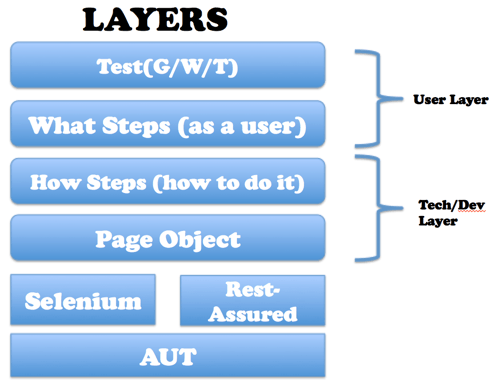

3. Not making your test framework maintainable by separating your framework out into layers

This is actually a continuation of planning your test automation framework, but it takes considerable ongoing effort, long after the planning itself is done. You really have to keep on top of this piece.

The best method I've found to keep tests maintainable is to use a layered approach.

In the first layer, you want to make sure that your tests are not written like code, but rather in a nice, high-level API using the same terminology as your application’s users.

At this level, you shouldn’t write your test like you would normal Java code; rather it should be at a high level where you've already created some test methods that really abstract the lower-level, technical details out into a lower layer.

You know you have a proper first layer if a non-technical member of your team can look at the test and say, “I totally understand what's going on.” The goal is to not need to be a developer to know what's happening at this level.

That's your very first layer — what I call “what steps?” (what a user wants to do). It's a layer that really makes it so your tests will be more maintainable and less brittle later on down the road, because your test is separated out from the technical details that actually implement that information.

In this high-level layer, if you worked at an insurance company, for example, your “what steps” API method names might be something like rate_policy or add_car_to_policy. Your high-level layer then calls lower-level layers that actually implement the user-friendly API you created.

The next layer is what I call “how steps” (how to do something). This is where the actual technical details — the Java code — would live, and where you would have your methods and parameters defined. It is also where you would include the implementation details on how to do something such as clicking and hovering — mouse/keyboard stuff.

The how steps, in turn, call another layer: the page object layer.

A page object is a class that models an application, or a portion of your application. This is where we actually have the lower-level, technical details of how to identify a field.

The great thing about creating your framework in layers is that if you have a test called Add Car to Policy and one of the fields changes within your car screen, you don't have to touch any of your tests.

All your tests would point to this one page object where you would go and make that change one time only, so that if you had 1,000 tests and you had to change a field name because the field name changed, you would only have to go to that one page object and make the change. All your tests would run then, and you wouldn't have to worry about going through every single test to make the change.

That's just one of the major benefits of separating your tests into layers.
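The three layers can be sketched in Java roughly as follows. This is only an illustration under stated assumptions, not the framework from the article: the `Browser` interface and `RecordingBrowser` are hypothetical stand-ins for a real driver such as Selenium WebDriver, the locators in `CarScreen` are invented, and `addCarToPolicy` is simply the Java-style spelling of the add_car_to_policy example.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for a real browser driver, so the sketch runs without a browser.
interface Browser {
    void type(String locator, String value);
    void click(String locator);
}

// In-memory fake that only logs what it was asked to do.
class RecordingBrowser implements Browser {
    final List<String> log = new ArrayList<>();
    @Override public void type(String locator, String value) { log.add("type " + locator + " = " + value); }
    @Override public void click(String locator) { log.add("click " + locator); }
}

// Page object layer: the only place that knows how fields on the car screen are identified.
class CarScreen {
    static final String MAKE_FIELD = "id=carMake";    // assumed locator
    static final String MODEL_FIELD = "id=carModel";  // assumed locator
    static final String SAVE_BUTTON = "id=saveCar";   // assumed locator
}

// "How steps" layer: the typing and clicking needed to carry out a user-level action.
class PolicySteps {
    private final Browser browser;

    PolicySteps(Browser browser) { this.browser = browser; }

    void addCarToPolicy(String make, String model) {
        browser.type(CarScreen.MAKE_FIELD, make);
        browser.type(CarScreen.MODEL_FIELD, model);
        browser.click(CarScreen.SAVE_BUTTON);
    }
}

// "What steps" layer: the test reads in the user's terms, with no locators or clicks in sight.
class AddCarToPolicyTest {
    public static void main(String[] args) {
        RecordingBrowser browser = new RecordingBrowser();
        PolicySteps policy = new PolicySteps(browser);

        policy.addCarToPolicy("Honda", "Civic");

        browser.log.forEach(System.out::println);
    }
}
```

If the make field were renamed in the application, only the `MAKE_FIELD` constant in `CarScreen` would need to change. `PolicySteps` and every test that calls `addCarToPolicy` would stay as they are.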

If you want to learn more about this approach, there's a really great Zephyr webinar I guested on with Dave Haeffner and John Sonmez called Getting Test Automation Right that discusses it in more detail.

So don’t create another Bride of Frankenstein automation framework – use layers!

4. Treating automation as a checkbox for your definition of “done”

I think this might be one of the scariest anti-patterns. It’s basically a recap of all the other points we covered. In a nutshell, it’s that test automation shouldn’t just be a checkbox you use as your definition of “done.”

You really want to be creating tests that add value to the process.

You want to have test automation cover as much as possible. At the same time, you want it to be quality automation. If something cannot be reliably automated, then don't automate it. If something doesn't make sense to automate, don't automate it. Don't use it to simply pad your metrics when you run your code coverage. You should be creating automation that is actually going to help you to create a quality product.

Automation should ultimately be one of the tools within your quality toolbox that allows you to eliminate some repetitive tasks, and frees you to do more exploratory-type testing where you can really use your testers to look at the application and really drill down to answer the question, “Is this working as a user would expect it to behave?” That's really the only way you're going to be able to obtain that type of coverage.

Automation is important. I believe in test automation one hundred percent, but it shouldn’t be the only tool you use to create a quality product.

If you're told otherwise, it should scare you.

Spot on Joe. I had not even thought about number Four. I think there is a Number Five: Scary changes introduced by HTML5 architecture objects making odd use of classes (Image class standing in for a Checkbox) and common unique identifiable properties, (Name or HTML ID) being blank or generalized. Is it just me or is this becoming the norm?

Good point Paul! I think that a lot of JavaScript frameworks generate dynamic fields without unique IDs. Developers can change that, but it takes a little extra effort.

Great observations, Joe! Keep up the good work.

I’d like to expand upon point #4.

In their haste to get automation completed and show value, a lot of teams either overlook or turn a blind eye to test data and configuration maintenance. Oftentimes, they don’t realize how it affects the reliability of the scripts until they begin to fail and become a maintenance burden. Or maybe they just don’t know how, or have determined that it’s an SEP (someone else’s problem). Regardless, they’re saddled with a bunch of junk that diminishes the value of the framework and the tests it executes.

Having a system to maintain and recover your test data is a must-have, but in addition to that, you also need the means to restore all the test environment configuration necessary for the tests to execute reliably. After all, configuration allows the AUT to process your test data. Without it, your tests are at best unpredictable, if not outright useless.

So in short, don’t forget your data and testing environment configuration.

Great article…. love the way it is written.. well done

Not just technical mumbo-jumbo

Thanks Vaughn!

My new favorite… 100% Automation. They set out to try to automate 100% of their tests. That along with the mythical metric of test coverage by automation. As Mark Twain once said (I think this is right)… “There are lies, damned lies and statistics”.