About this DevOps Toolchain Episode:

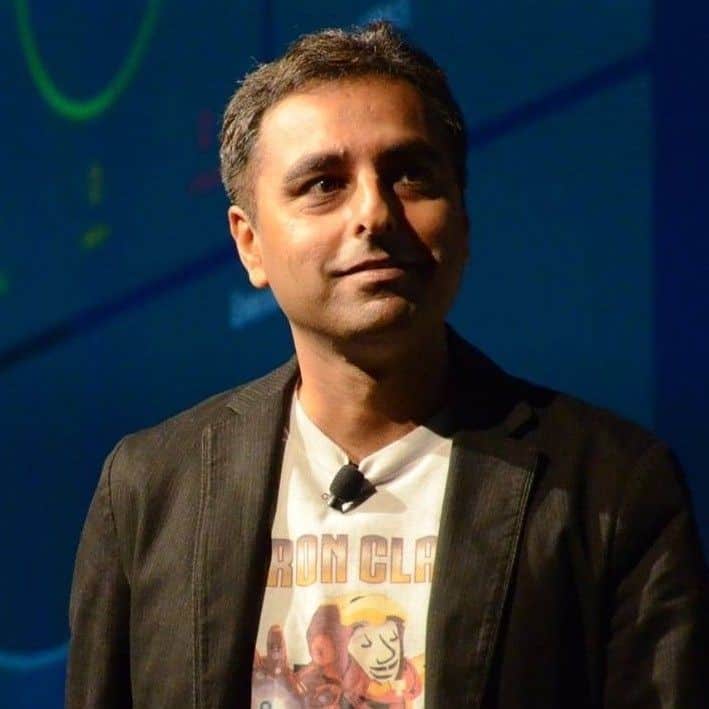

Today, we have a special guest, Harpreet Singh, the co-founder and co-CEO of Launchable. Harpreet joins us to discuss an exciting frontier in software testing—using machine learning to predict failures and streamline the testing process.

Imagine a tool that can intelligently shift tests left in the development cycle, prioritize the most critical tests based on risk, and notify you via Slack when issues arise—all while continually learning and improving.

This is precisely what Harpreet and his team have achieved with Launchable. Their platform integrates seamlessly into CI/CD pipelines and provides engineering teams with valuable insights to speed up the process of identifying and fixing test failures.

In this episode, we'll delve into how Launchable aids in catching issues earlier, the profound influence of AI on the testing landscape, and real-life examples like BMW that illustrate the solution's effectiveness. We'll also explore Harpreet's advice on adopting a targeted approach to AI in DevOps and how their solution alleviates cognitive load, saving developers time and effort.

Try out SmartBear's BugSnag for free today. No credit card required. https://links.testguild.com/bugsnag

TestGuild DevOps Toolchain Exclusive Sponsor

SmartBear’s BugSnag: Get real-time data on real-user experiences – really.

Latency is the silent killer of apps. It’s frustrating for the user, and under the radar for you. It’s easily overlooked by standard error monitoring. But now SmartBear's BugSnag, an all-in-one observability solution, has its own performance monitoring feature: Real User Monitoring.

It detects and reports real-user performance data – in real time – so you can rapidly identify lags. It also gives you the context to fix them.


About Harpreet Singh

Harpreet is the Co-Founder/Co-CEO of Launchable, a solution that leverages AI and machine learning to enhance software testing by providing intelligent diagnostics for test failures. Their platform helps DevOps engineering teams quickly identify and classify test failures, reducing the cognitive load and speeding up the process of finding and fixing underlying issues.

Before starting Launchable, Harpreet served as VP of Product at CloudBees, where he helped the company find product-market fit by bringing in an Enterprise version of Jenkins, creating a successful business based on OSS that scaled to multi-millions in ARR. He seeded product and design teams, was deeply involved with sales engineering, and established a successful conference called DevOps World to push the discussion of DevOps forward. As GM for Atlassian Bitbucket, Harpreet helped set the blueprint for what became Atlassian's strategy to embrace DevOps tools in the market. He got a firsthand look at the famed Atlassian product-led-growth motion.

Connect with Harpreet Singh

- Company: www.launchableinc

- Blog: harpreet.io

- LinkedIn: www.singhharpreet

Rate and Review TestGuild DevOps Toolchain Podcast

Thanks again for listening to the show. If it has helped you in any way, shape or form, please share it using the social media buttons you see on the page. Additionally, reviews for the podcast on iTunes are extremely helpful and greatly appreciated! They do matter in the rankings of the show and I read each and every one of them.

[00:00:00] When a QA person comes into the office in the morning and the nightly tests are done, it's like they're coming to a crime scene and there are 500 dead bodies there. And we are trying to provide as much forensic data as we can to this guy or girl to help them figure out who the killer is. Like, what's the problem?

[00:00:19] Get ready to discover some of the most actionable DevOps techniques and tooling, including performance and reliability, from some of the world's smartest engineers. Hey, I'm Joe Colantonio, host of the DevOps Toolchain Podcast, and my goal is to help you create DevOps toolchain awesomeness.

[00:00:37] Joe Colantonio Hey, today we'll be talking with Harpreet Singh all about Intelligent Test Failure Diagnosis and why it's going to help you accelerate delivery in DevOps pipelines. If you don't know, Harpreet is the co-founder and co-CEO of Launchable, a solution that leverages AI and machine learning to enhance software testing by providing intelligent diagnosis for test failures, and it has a bunch of other features as well. Their platform really helps DevOps engineering teams quickly identify and classify test failures, reducing the cognitive load and speeding up the process of finding and fixing underlying issues. So if you want to know how to remove bottlenecks in delivery, optimize your tests, filter out noise, analyze problems, and track fixes to help you release software with confidence, you really don't want to miss this episode. Check it out!

[00:01:28] Hey, if your app is slow, it could be worse than an error. It could be frustrating. And one thing I've learned over my 25 years in the industry is that frustrated users don't last long. But since slow performance isn't sudden, it's hard for standard error monitoring tools to catch. That's why I think you should check out BugSnag, an all-in-one observability solution that has a way to automatically watch for these issues: real user monitoring. It checks and reports real-user performance data in real time so you can quickly identify lags. Plus, you get the context of where the lags are and how to fix them. Don't rely on frustrated user feedback. Find out for yourself. Go to bugsnag.com and try it for free. No credit card required. Check it out. Let me know what you think.

[00:02:20] Joe Colantonio Hey, Harpreet. Welcome to the Guild.

[00:02:24] Harpreet Singh Thank you so much for having me here. Really appreciate that.

[00:02:27] Joe Colantonio Awesome. Great to have you. So before we get into it, I'm always fascinated by founders and why they created their solution. You have a great background with a bunch of different things, a lot of DevOps. So why Launchable? Why did you create Launchable? What inspired you, and why an AI-driven test failure diagnosis platform to begin with?

[00:02:47] Harpreet Singh Right. There's a bit of a long story there. My background started with Jenkins. I'm sure you've heard of that tool. Interestingly enough, it was started by my co-founder. He created Jenkins while hunched over in a cubicle at Sun, and I was around. I was an engineer who had just transitioned to product management, and I thought, this is pretty interesting; it seems like it's going to have a massive impact on software delivery. To cut a long story short, I spent maybe eight years helping build a company called CloudBees, which takes Jenkins to market. I built that business, and my co-founder, Kohsuke, the creator of Jenkins, was part of this company too. We came to a place where we were helping a lot of people do DevOps transformations. Jenkins is the backbone on which software is delivered. And we realized that despite helping people do that, it was still taking them ages to ship software. That didn't seem great. So we started talking: okay, where is the bottleneck in software delivery? And the answer popped up: it's really in the tests. What happens is you create all these pipelines, and pipelines are a series of test suites connected one after the other, giving you greater confidence in the software that you deliver. But the tests keep growing, and they take time. Every time you push a change, no matter how smooth your pipeline is, you're going to spend that time. We zeroed in on that, saying there has to be a better way of delivering software and cutting this huge cost out of the delivery process. And that's essentially what got us started with the company.

[00:04:53] Joe Colantonio Awesome. So I was listening to another podcast you were on. I think you got into AI maybe 20 years ago or more, and you said you were too early. But you started Launchable about four years ago, which was, I mean, AI was around obviously, but it was before ChatGPT and generative AI really took off. So it seems like you were right at the sweet spot. How did you know it was the right time to launch a solution with AI?

[00:05:17] Harpreet Singh To be honest, I think I was still too early when starting the company. But as we looked at this problem, we thought there has to be a better way; it can't be the same old ways of doing things. In my past lives, I had tried to communicate to my leadership that we should be doing something with AI, and it always seemed like it was too early for that. Then Kohsuke and I sat down and realized there's so much data coming out of the exhaust pipe of the software delivery process, and it just gets thrown away. Nobody is using it. This was four years back. We could take all this exhaust, throw the data at machine learning, and see what comes out the other end. That was the second leg of our company hypothesis. The first was that we could go in and optimize this process that seems to be a problem; the second was that the way we would do that is by using machine learning and AI.

[00:06:27] Joe Colantonio Love it. So you mentioned that tests are often the bottleneck in your software delivery pipeline. How does Launchable specifically address that issue? How does it enhance delivery speed?

[00:06:38] Harpreet Singh Yeah. So there are code changes coming in, and we can predict which tests are likely to fail based on those code changes. We look at a bunch of other signals as well, 40 or 50 other ones. What that lets us do is, instead of waiting, say, an hour for your test suite to finish and then finding that at minute 50 some test fails, we can go straight to that minute-50 test, bring it up, and execute it first. So you get the feedback right away that your build is going to fail. Rather than waiting an hour for the signal to come back to devs, you can get it in the first few minutes. I like to say about 80% of your tests are pointless; you just don't know which ones. And we can find the most important ones upfront. That feedback goes to your devs, and it can drastically reduce their turnaround time.
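To make the "minute 50" example concrete, here is a minimal Python sketch of failure-first ordering. It is an illustration under assumptions, not Launchable's implementation: `SuiteEntry`, `prioritize`, and `minutes_until` are hypothetical names, and each test's failure probability is assumed to come from some model that has already scored the incoming change.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class SuiteEntry:
    name: str
    duration_min: float  # typical runtime of this test, in minutes
    p_fail: float        # hypothetical model-estimated failure probability for the current change


def prioritize(tests: list[SuiteEntry]) -> list[SuiteEntry]:
    """Return a run order that puts the tests most likely to fail first."""
    return sorted(tests, key=lambda t: t.p_fail, reverse=True)


def minutes_until(tests: list[SuiteEntry], name: str) -> float:
    """Elapsed minutes until the named test finishes, running tests one after another."""
    elapsed = 0.0
    for t in tests:
        elapsed += t.duration_min
        if t.name == name:
            return elapsed
    raise ValueError(f"{name} is not in the run order")


suite = [
    SuiteEntry("test_ui_layout", 20, 0.02),
    SuiteEntry("test_reports", 28, 0.05),
    SuiteEntry("test_auth_token_refresh", 2, 0.70),  # the test that will actually fail
]
print(minutes_until(suite, "test_auth_token_refresh"))              # 50.0
print(minutes_until(prioritize(suite), "test_auth_token_refresh"))  # 2.0
```

With a single sequential runner, the likely failure surfaces at minute 2 instead of minute 50; the total suite time is unchanged, only the order moves.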

[00:07:42] Joe Colantonio So rather than waiting until production, it's earlier. You shift it further left: when they check in code, it runs the more important tests, the ones you know could fail or tend to fail more often?

[00:07:51] Harpreet Singh Yes, the tests that tend to fail based on the changes that are coming in. Tests that could fail or tend to fail more often would be in that mix as well. That's where we started off, and it's a risk-based approach to testing. One way we thought about this is that SREs are fairly risk-focused; they look at the risks in the system. So we're bringing that approach here. You go in and say, well, my risk-to-reward threshold is X. I want 90% confidence in my test results, and we will give you a subset of tests that matches that. Or I want 10% confidence, and we'll give you the tests that match that. That's one part of the solution we built, and it's been running in production for a few years.
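One way to read "give me a subset that matches 90% confidence" is sketched below, under loudly stated assumptions: per-test failure probabilities are given and independent, and "confidence" means the chance the subset catches a failure, relative to the chance the full suite would. `subset_for_confidence` is a hypothetical helper, not Launchable's API or its actual definition of confidence.

```python
from __future__ import annotations

from math import prod


def p_any_failure(probs) -> float:
    """Probability that at least one test fails, assuming independent failures (an assumption)."""
    return 1.0 - prod(1.0 - p for p in probs)


def subset_for_confidence(p_fail: dict[str, float], target: float) -> list[str]:
    """Add tests in order of failure probability until the subset's chance of catching
    a failure reaches `target` times the full suite's chance."""
    total = p_any_failure(p_fail.values())
    if total == 0.0:
        return []  # nothing is expected to fail
    chosen: list[str] = []
    for name in sorted(p_fail, key=p_fail.get, reverse=True):
        chosen.append(name)
        if p_any_failure(p_fail[n] for n in chosen) / total >= target:
            break
    return chosen


probs = {"test_login": 0.5, "test_session": 0.2, "test_billing": 0.1, "test_export": 0.01}
print(subset_for_confidence(probs, 0.9))  # ['test_login', 'test_session']
print(subset_for_confidence(probs, 0.1))  # ['test_login']
```

Raising the target trades runtime for confidence: the higher the threshold, the more tests the loop has to add before it stops.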

[00:08:40] Joe Colantonio I love this because I used to work at a healthcare company. We'd get a hotfix we'd have to release right away. They'd check in code, and we didn't know which tests to run, so we'd run all of them. Some would fail, and they had nothing to do with the check-in, but we had no confidence in knowing what caused the issue. Does this help with that as well? Like, does it know that if I check in code A, tests Z and F will cover that check-in? How does it know that?

[00:09:04] Harpreet Singh Yeah, absolutely. That's really the basic premise of that piece of software. The way we do it is, over a period of time, we look at the code changes coming in and the tests being run against them, and we build a machine learning model from that. After a point, the model can look at changes coming in and say, okay, if you did this, it's likely that test 1515 is going to fail, right? The model predicts that and gives you 1515, you execute 1515, and you get that signal back. To make it a little simpler and more intuitive, let's say Harpreet was an engineer. He no longer is; it's been a while since he's coded. And suddenly one fine day he pushes changes in, and the model sees that he's just broken a .... and then .... back again and pushes the changes in. It's fairly obvious that he's likely to break the build again. We're looking at those kinds of signals coming out of your test runs and your code, building this model, and predicting from that.
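A toy version of "learn from past changes and test results" might look like the sketch below. It is deliberately far simpler than what the episode describes (Harpreet mentions 40 or 50 signals and a trained model): here the only signal is which files changed, and `CoFailureModel` is a hypothetical name. It counts how often each test failed in past runs that touched a file and applies Laplace smoothing, so files with no history get a neutral 0.5.

```python
from __future__ import annotations

from collections import defaultdict


class CoFailureModel:
    """Toy stand-in for a learned model: per (changed file, test) pair, it counts how often
    the test failed in past runs that touched that file."""

    def __init__(self) -> None:
        self.changes = defaultdict(int)   # file -> number of runs that changed it
        self.failures = defaultdict(int)  # (file, test) -> failures seen in those runs

    def record_run(self, changed_files: list[str], failed_tests: list[str]) -> None:
        """Learn from one CI run: what changed and which tests failed."""
        for f in set(changed_files):
            self.changes[f] += 1
            for t in set(failed_tests):
                self.failures[(f, t)] += 1

    def predict(self, changed_files: list[str], tests: list[str]) -> dict[str, float]:
        """Score each test by its highest smoothed failure rate across the changed files."""
        scores = {}
        for t in tests:
            rates = [
                (self.failures.get((f, t), 0) + 1) / (self.changes.get(f, 0) + 2)
                for f in changed_files
            ]
            scores[t] = max(rates, default=0.0)
        return scores


model = CoFailureModel()
for _ in range(4):
    model.record_run(["auth/session.py"], ["test_login"])
model.record_run(["billing/invoice.py"], ["test_invoice_totals"])
model.record_run(["billing/invoice.py"], [])

scores = model.predict(["auth/session.py"], ["test_login", "test_invoice_totals"])
print({t: round(s, 2) for t, s in scores.items()})  # {'test_login': 0.83, 'test_invoice_totals': 0.17}
```

The same counting could be keyed on the committer instead of the file, which is roughly the "Harpreet just broke the build again" signal from the anecdote.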

[00:10:19] Joe Colantonio That's awesome, because once again, I worked with 8 to 10 sprint teams, and it was always the same two of them breaking the build. It sounds like this would know, okay, Team Sopranos just checked in code; gotta run these tests that usually have to do with their check-ins.

[00:10:35] Harpreet Singh Yes. It goes down to the level of an individual developer, and it can predict which tests need to run. It does that mapping really well for regression tests and integration tests, which is where a lot of the complexity comes in. On a non-trivial project, hundreds of people are checking things in and running integration tests, and it's always a problem trying to figure this out so that your mainline stays healthy. We can come in and say, well, you can intelligently shift this test left. That's one way we get used. Let's contrast this with how people put together smoke tests: somebody has cherry-picked a bunch of tests they think are important, and they run regardless of what's happening. So let's say the authentication infrastructure is being changed and there's one test for it there, but you're still running your database connection tests and so on and so forth. What this approach lets you do is build a dynamic smoke test. Oh, you've made a bunch of changes to the authentication infrastructure, so it looks like more authentication-related tests need to be run and fewer database tests. The model can automatically pick those and help you execute them. So you can now take these tests and shift them much earlier in the life cycle if you want to. It intelligently shifts tests left, and you catch issues much earlier in the cycle.

[00:12:16] Joe Colantonio I know when we talk about tests, a lot of times people think of functional automation. But you mentioned integration testing. So is it all types of testing, unit, integration? Could it be performance, security? Does it matter what type of tests it can handle?

[00:12:28] Harpreet Singh It doesn't matter actually.

[00:12:29] Joe Colantonio Really?

[00:12:30] Harpreet Singh The reason we chose machine learning was so that we could be applicable to any type of test and test suite, wherever they are. We don't do performance tests; that's the only exception. Since this is a machine learning model, it's essentially classifying whether a test would pass or fail. Performance tests have multiple outputs, so we don't do those. But otherwise it doesn't really matter what tests you're using.

[00:13:05] Joe Colantonio Awesome. So how hard is this to integrate into your CI/CD pipelines? Is it an agent, like a sniffer, that knows when you checked in? And how does it know about production? Is it learning from production to know what's risky? How does that all work?

[00:13:19] Harpreet Singh It's actually very simple to integrate. It ends up in your CI tool: there's a Python program we've written that gets integrated into your CI script. Once you push some changes in, you point us to your source code repository, so we know what changes have been made there. Then, once you run the tests, you send us the test results, so we know which tests were run and how they performed. And then the model starts learning. That's about two lines of changes in your CI script, and it takes about 30 minutes to set up. I often joke that it takes me two weeks to find the person in a customer's account to come and do this, but the work itself takes about 30 to 40 minutes. Then, when you're about to execute the tests, you just call Launchable and say, hey, Launchable, I have a 10-minute threshold. Going back to your earlier problem: okay, a hotfix is coming in, and I have 10 minutes to check it. So, Launchable, give me all the tests you think are likely to fail in this 10-minute window. Find them, package them, squeeze as much juice out as you can, and give me the tests with the highest probability of failing. And we return that to you. That's three or four lines of changes in your CI script. The other thing is that we don't actually look at the code. We look at metadata from the code. So your code remains with you, and your tests remain with you; we're looking at code and test metadata. When the results come back from us, which is, hey, Launchable, give me tests X, Y, Z, those tests execute in your environment. Really, nothing changes on your end. We're not asking you to migrate your tests to us; they continue to run wherever they are. All you see is that suddenly you're getting failures way, way faster than you did in the past.
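The "give me the likeliest failures that fit in 10 minutes" request is essentially a knapsack problem. Below is a greedy sketch under assumed inputs (per-test durations and predicted failure probabilities); `Candidate` and `subset_for_time_budget` are hypothetical and are not the Launchable CLI or API.

```python
from __future__ import annotations

from typing import NamedTuple


class Candidate(NamedTuple):
    name: str
    duration_min: float  # expected runtime in minutes
    p_fail: float        # hypothetical predicted failure probability


def subset_for_time_budget(tests: list[Candidate], budget_min: float) -> list[str]:
    """Greedy knapsack heuristic: take tests with the most failure probability per minute
    first, skipping any test that would overflow the time budget."""
    ranked = sorted(tests, key=lambda t: t.p_fail / max(t.duration_min, 1e-9), reverse=True)
    chosen: list[str] = []
    used = 0.0
    for t in ranked:
        if used + t.duration_min <= budget_min:
            chosen.append(t.name)
            used += t.duration_min
    return chosen


hotfix_candidates = [
    Candidate("test_checkout", 6, 0.6),
    Candidate("test_login", 2, 0.4),
    Candidate("test_search", 5, 0.3),
    Candidate("test_profile", 1, 0.05),
]
print(subset_for_time_budget(hotfix_candidates, budget_min=10))
# ['test_login', 'test_checkout', 'test_profile']
```

Ranking by probability per minute rather than raw probability lets short, likely-to-fail tests win budget over long ones. A greedy pass is not guaranteed to be optimal, but it is cheap to compute on every push.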

[00:15:20] Joe Colantonio Awesome. I mean, when it comes to machine learning, I'm very much a novice. I assume it takes some time to really get better, tweaking itself and learning over time. When someone implements it, how long until you get results you can be fairly confident in?

[00:15:35] Harpreet Singh The model starts learning right away, and it starts predicting right away. But we tend to ask customers to wait up to two weeks before turning it on. From there on, it's fairly good to go. And the beauty of the approach is that the model always keeps learning. Today you might be working in, say, the authentication piece of the code base; tomorrow you might be somewhere else. The model follows you pretty well and keeps predicting well. So the machine learning piece takes about two weeks.

[00:16:11] Joe Colantonio All right. This might be a dumb question. What happens if you don't have a test for a risk area? Does it also bubble up insights like, hey, you're running a bunch of tests, but this check-in has no coverage? Does that ever happen?

[00:16:23] Harpreet Singh Actually, it's not a dumb question. It's an often-asked question. We don't cater to that, because there are enough test coverage tools out there that we don't need to reinvent that, and people already have test coverage tooling set up. Where we work is if you have a reasonably sized code base and a reasonably sized test suite, and those tests are automated. We come in and help you there.

[00:16:53] Joe Colantonio All right. So obviously you're saving time because you're shifting things earlier in the process, and rather than running a ton of tests, you're targeting just the tests that relate to the code check-in. Can you also assume that if people are using containers and ephemeral environments, their costs will go down because they're not running all these tests? That would add another plus to this type of solution.

[00:17:14] Harpreet Singh Yeah, that's a great insight, actually. That's one common use case people have, which is: well, a lot of our budget goes to test execution. If you latch on to "80% of your tests are pointless" (an intentionally inflammatory comment), you go, I don't need to run all of this all the time, so why don't I just not? That's one way people reduce costs. Another, counterintuitive insight is that they go, okay, now I'm running only 5% of my tests; I don't need to run my entire test suite. But now I can run that 5% all the time. Instead of waiting for my nightly test run, I can do a five-minute or ten-minute run every hour, and I'll catch issues every hour instead of in the nightly build. I might actually be executing more tests with this approach, at the same cost I was spending earlier to run that one massive test suite.

[00:18:19] Joe Colantonio All right. That's a great point. We used to run the tests at night: the whole test suite would run, we'd get failures, and we'd assign them to people. Does it do that automatically? I guess, does it also alert the right people, so it knows not only that the test failed, but that you're the one responsible for it?

[00:18:35] Harpreet Singh Yes. You're actually latching on to something we built with Slack notifications, where we use this data to notify individuals right away, saying, hey, you broke the build. You don't have to go and poll your CI server to see whether you broke the build. That's one piece we've done. The other piece, which you didn't ask about but that we've been spending a lot of time building lately, is this notion of triaging issues, right? You ran the builds, you come in the next morning, and the first thing you see is a data dump of something like 500 test failures. What we discovered with our customers is that they were using the tests to get this feedback much earlier, but what they're really trying to do is get a handle on their triaging activities. So what we're doing here, which I call intelligent test failure diagnostics, is: all right, I have 500 failures. Are they all one issue, say somebody disconnected the database, or are there 500 underlying issues? Can we group them? This is where we're throwing this to LLMs. We can come back, classify these failures for you, and say, all right, these 50 failures are one underlying issue. We've upleveled that into an issue, so you can go look at that particular issue, and if you fix it, it's going to fix the 50 test failures. A lot of the cognitive load when you're debugging these kinds of failures comes from looking at the log of test one, then the log of test two, and by the fifth you've given up and you go, these seem to be related; let me go fix those. So we're bringing in AI to augment that process.
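Per the episode, Launchable uses LLMs to classify failures. As a much simpler stand-in, for illustration only, the sketch below swaps in a deterministic technique: grouping failures by a normalized error signature (the first line of the message with hex addresses, numbers, and quoted strings masked). `signature` and `group_failures` are hypothetical helpers.

```python
from __future__ import annotations

import re
from collections import defaultdict

# Details that vary between otherwise identical failures. Order matters:
# hex addresses before plain numbers, then quoted strings.
_VOLATILE = [
    (re.compile(r"0x[0-9a-fA-F]+"), "<hex>"),
    (re.compile(r"\d+"), "<n>"),
    (re.compile(r"'[^']*'"), "'<s>'"),
]


def signature(message: str) -> str:
    """Reduce a failure message to its first line with volatile details masked."""
    lines = message.strip().splitlines()
    sig = lines[0] if lines else ""
    for pattern, token in _VOLATILE:
        sig = pattern.sub(token, sig)
    return sig


def group_failures(failures: dict[str, str]) -> dict[str, list[str]]:
    """Map each signature to the tests that share it, largest group first."""
    groups: dict[str, list[str]] = defaultdict(list)
    for test, message in failures.items():
        groups[signature(message)].append(test)
    return dict(sorted(groups.items(), key=lambda kv: len(kv[1]), reverse=True))


nightly = {
    "test_login": "ConnectionError: could not connect to db-01:5432 after 30s",
    "test_orders": "ConnectionError: could not connect to db-02:5432 after 30s",
    "test_cart": "AssertionError: expected 3 items, got 2",
}
for sig, tests in group_failures(nightly).items():
    print(len(tests), sig, tests)
# 2 ConnectionError: could not connect to db-<n>:<n> after <n>s ['test_login', 'test_orders']
# 1 AssertionError: expected <n> items, got <n> ['test_cart']
```

Signature matching only catches failures whose messages share a shape; the appeal of an LLM-based classifier, as described in the episode, is grouping failures whose messages differ but share a root cause.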

[00:20:30] Joe Colantonio I mean, that's a big gain.

[00:20:32] Harpreet Singh And so that's another piece.

[00:20:34] Joe Colantonio Yeah, I think that's a huge piece, because when you come in and you have a thousand tests and 500 failed, rather than going through each of them, knowing that if you fix this one thing it'll fix 499 of them is huge. Someone's checking in code, their tests are kicking off, and you need to respond really quickly. So that really helps.

[00:20:51] Harpreet Singh Yeah, absolutely. I think it's going to be a game changer in terms of helping QA and engineers get to the bottom of issues. As part of that story, we're also helping bubble these issues up into a dashboard. And our customers are asking for ways to assign them to particular team members or teams, which is where your question started off. So we're building stuff that says, all right, .... Joe on team X, assigning these problems to the right people so they can go figure them out and fix them from there.

[00:21:31] Joe Colantonio This might be another weird question. When you started, you said you used to try to pitch AI to a company you were working at, and they said it was too early. Now that you have a solution that's AI-driven, how hard is it to cut through the noise? Because everyone's saying they're AI, they're machine learning. Is that still an issue, educating people that, hey, this is real and this can help? Is that something you struggle with?

[00:21:53] Harpreet Singh It certainly is. I'll give you a very interesting story about how the dynamics have changed. The first two years I pitched the product, people would look at me and go, AI? This is not for us. I actually remember somebody from a Bay Area company who came on a call and said, well, I don't believe in AI, and I think I can build something like this over the weekend. All right, cool. Good for you. And then suddenly, people are coming in with a mandate from above: we need to do something with AI, go figure it out. And now they're looking into every AI tool. So it's quite a change. What's working now is that people are leaning in; you can't be that guy saying, I don't believe in AI. So that has changed. But it becomes very interesting talking to people. As they're trying to cut through the noise of AI, one of the things I've found is that people have a very hard time understanding things statistically. So when you talk to them about AI and say, okay, this is what the model is doing, and you show them the statistics, how you evaluate models, and these curves, they don't understand that, right? They want to know, oh, but in this particular instance, what did your AI do? And it's like, no, no, that's not how it works. I can't go in and explain every individual decision. So, to your question, people are trying to understand what's actually AI about your product, and I think they're doing that across various tools.

[00:23:30] Joe Colantonio Nice. You've mentioned a bunch of different features, what it's doing and how you're adding on to it, and it's been four years. Is there anything else it helps with that we may have missed, or that you're working on, like the future of AI in DevOps? Any new features you could see coming into play soon on your roadmap?

[00:23:49] Harpreet Singh One of the things we have worked on, and it's available in our product, is this notion of flagging unhealthy tests and test suites to people. Most test suites, when they get big enough, have a bunch of flaky tests that cause friction. And just to be clear, this part isn't AI; it's statistics. We can come back and say, these tests are the most flaky, and this is how much time they're costing you; these tests are the ones that fail most. We're trying to give engineers the data to go back to their product management and say, we need to fix these tests instead of building what you're asking for. That's one component we have worked on. But where we are headed is that triaging process, right? The triaging example I gave you is really where we are taking the company. The thing we did that helps you auto-classify failures is something we're launching in the next week or so. But as we've brought this to our customers, they're coming back and saying, okay, you know what? I would love it if you could tell me whether these failures are caused by code, by a human, or by the tests. There's a lot that can be done there to make it intelligent. An anecdote I use about this: when a QA person comes into the office in the morning and the nightly tests are done, it's like they're coming to a crime scene. There are 500 dead bodies here, right? And we're trying to provide as much forensic data as we can to this guy or girl to help them figure out who the killer is, what the problem is. There's a lot of forensic data we can keep adding. A straightforward example: when we create these issues from your test failures, we can tell you, hey, these tests are flaky. If they're flaky, I don't really need to look at them; maybe I just need to rerun them. But hey, this test has never failed before. If it never failed, then I should really be going deep into it, and so on. That's how we're helping that person get a handle on failures.
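The "flaky, so rerun" versus "never failed, so dig in" distinction can be approximated from a test's recent history alone. A minimal sketch, assuming outcomes are stored oldest first as booleans (True = passed); `triage_hint` and its 0.2 flip-rate threshold are arbitrary choices for illustration, not Launchable's logic.

```python
from __future__ import annotations


def triage_hint(history: list[bool], flaky_threshold: float = 0.2) -> str:
    """Suggest how to treat today's failure of one test, given its past outcomes
    (oldest first, True = passed). The threshold is an illustrative value."""
    if all(history):
        return "never failed before: investigate"
    # Count pass/fail flips between consecutive runs; frequent flips suggest flakiness.
    flips = sum(1 for prev, cur in zip(history, history[1:]) if prev != cur)
    flip_rate = flips / max(len(history) - 1, 1)
    if flip_rate >= flaky_threshold:
        return "likely flaky: rerun before digging in"
    return "failing consistently: likely a real breakage"


print(triage_hint([True] * 10))                                          # never failed before: investigate
print(triage_hint([True, False, True, True, False, True, False, True]))  # likely flaky: rerun before digging in
print(triage_hint([True] * 5 + [False] * 3))                             # failing consistently: likely a real breakage
```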

[00:26:14] Joe Colantonio Love it. Back in the day when I had to do this, I used to have to tag tests manually and quarantine them when I noticed one had to run three times. But you're coming at it from a statistics perspective, so it sounds like even that part isn't manual anymore. Am I understanding that correctly, that I don't have to start tagging everything?

[00:26:33] Harpreet Singh Yeah, we can just auto-classify and tell you, and make it really clear: okay, here are the top 10 flaky tests, and here's how much time each flaky test has cost your organization. Here's a prioritized list where you can go and look, and then you can click on, say, the top flaky test and go into the forensics of it: okay, this showed up in test sessions 1, 15, and 20, and it's been showing up in the last 5 sessions. Something has really gone wrong here; the flakiness has increased, and so on. We can graph that out for you and just make it really intelligent. Make the AI do it; I don't know the best way to put it. Just make it intelligent so you can focus on the problem instead of digging through all the nooks and crannies to see what's going on.
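A "top flaky tests by time cost" report can be computed with plain statistics, in line with Harpreet's note that this part isn't AI. This sketch assumes a common definition of flakiness (the same test both failed and passed on the same commit, e.g. on a retry) and counts the runtime of those failed runs as wasted time; `Run` and `flaky_report` are hypothetical names.

```python
from __future__ import annotations

from collections import defaultdict
from typing import NamedTuple


class Run(NamedTuple):
    test: str
    commit: str
    passed: bool
    duration_min: float


def flaky_report(runs: list[Run], top_n: int = 10) -> list[tuple[str, float]]:
    """Rank tests by minutes spent on flaky failures, i.e. failures on a commit where the
    same test also passed (for example on a retry)."""
    outcomes: dict[tuple[str, str], set[bool]] = defaultdict(set)
    for r in runs:
        outcomes[(r.test, r.commit)].add(r.passed)
    wasted: dict[str, float] = defaultdict(float)
    for r in runs:
        if not r.passed and outcomes[(r.test, r.commit)] == {True, False}:
            wasted[r.test] += r.duration_min
    return sorted(wasted.items(), key=lambda kv: kv[1], reverse=True)[:top_n]


runs = [
    Run("test_upload", "c1", False, 4), Run("test_upload", "c1", True, 4),
    Run("test_upload", "c2", False, 4), Run("test_upload", "c2", True, 4),
    Run("test_login", "c1", False, 1), Run("test_login", "c1", True, 1),
    Run("test_search", "c1", False, 2),  # no passing retry, so not counted as flaky
]
print(flaky_report(runs))  # [('test_upload', 8.0), ('test_login', 1.0)]
```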

[00:27:27] Joe Colantonio All right. A lot of people listening are probably thinking this sounds great, maybe a little too good to be true. You've been around for a while now, so do you have any case studies or actual statistics, like you've saved X amount when you've implemented your solution, or anything like that?

[00:27:41] Harpreet Singh Yeah. You can go to Launchableinc.com/customers, and there are a bunch of case studies out there. We have customers like BMW, who have been using us for a while now as part of their delivery process, and a bunch of others. So yeah.

[00:27:59] Joe Colantonio Awesome. Okay, Harpreet, before we go, is there one piece of actionable advice you can give to someone to help them with their AI DevOps efforts? And what's the best way to find or contact you?

[00:28:10] Harpreet Singh All right, let's start with the best way to contact us, which is Launchableinc.com. There are plenty of write-ups out there that tell you how AI can be used. We have a very targeted approach. I remember watching one of your earlier podcasts in which the guest gave some very nice advice: pick one problem that's causing a lot of friction and go solve that, rather than boiling the ocean. My biased answer is that in most organizations, executing tests is a huge problem. So if that's an area for you, you have a targeted place to start looking. That would be my advice.

[00:29:00] Remember, latency is the silent killer of your app. Don't rely on frustrated user feedback. You can know exactly what's happening and how to fix it with BugSnag from SmartBear. See it for yourself. Go to BugSnag.com and try it for free. No credit card is required. Check it out. Let me know what you think.

[00:29:21] And for links to everything of value we covered in this DevOps Toolchain Show, head on over to Testguild.com/p149. That's it for this episode of the DevOps Toolchain Show. I'm Joe. My mission is to help you succeed in creating end-to-end full-stack DevOps Toolchain Awesomeness. As always, test everything and keep the good. Cheers.

[00:29:43] Hey, thanks again for listening. If you're not already part of our awesome community of 27,000 of the smartest testers, DevOps, and automation professionals in the world, we'd love to have you join the FAM at Testguild.com. And if you're in the DevOps automation software testing space, or you're a test tool provider and want to offer real-world value that can improve skills or solve a problem for the Guild community, I'd love to hear from you. Head on over to testguild.info and let's make it happen.
